Data Analysis – Process, Methods and Types

Data analysis is the systematic process of inspecting, cleaning, transforming, and modeling data to uncover meaningful insights, support decision-making, and solve specific problems. In today’s data-driven world, data analysis is crucial for businesses, researchers, and policymakers to interpret trends, predict outcomes, and make informed decisions. This article delves into the data analysis process, commonly used methods, and the different types of data analysis.

Data Analysis

Data analysis involves the application of statistical, mathematical, and computational techniques to make sense of raw data. It transforms unorganized data into actionable information, often through visualizations, statistical summaries, or predictive models.

For example, analyzing sales data over time can help a retailer understand seasonal trends and forecast future demand.

Importance of Data Analysis

- Informed Decision-Making: Helps stakeholders make evidence-based choices.

- Problem Solving: Identifies patterns, relationships, and anomalies in data.

- Efficiency Improvement: Optimizes processes and operations through insights.

- Strategic Planning: Assists in setting realistic goals and forecasting outcomes.

Data Analysis Process

The process of data analysis typically follows a structured approach to ensure accuracy and reliability.

1. Define Objectives

Clearly articulate the research question or business problem you aim to address.

- Example: A company wants to analyze customer satisfaction to improve its services.

2. Data Collection

Gather relevant data from various sources, such as surveys, databases, or APIs.

- Example: Collect customer feedback through online surveys and customer service logs.

3. Data Cleaning

Prepare the data for analysis by removing errors, duplicates, and inconsistencies.

- Example: Handle missing values, correct typos, and standardize formats.
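The cleaning step can be sketched in a few lines of plain Python. This is a minimal illustration, not a prescribed procedure: the record layout (`customer`, `region`, `score`), the sample values, and the choice of median imputation are all assumptions made for the example.

```python
from statistics import median


def clean_records(records):
    """Standardize text fields, drop duplicates, and fill missing scores."""
    seen = set()
    cleaned = []
    for rec in records:
        # Standardize formats: trim whitespace and normalize letter case.
        name = rec["customer"].strip().title()
        region = rec["region"].strip().upper()
        key = (name, region, rec["score"])
        if key in seen:  # duplicate once the formats are standardized
            continue
        seen.add(key)
        cleaned.append({"customer": name, "region": region, "score": rec["score"]})

    # Handle missing values: impute with the median of the observed scores
    # (an assumed strategy; dropping the rows is another common choice).
    observed = [r["score"] for r in cleaned if r["score"] is not None]
    fill = median(observed)
    for r in cleaned:
        if r["score"] is None:
            r["score"] = fill
    return cleaned


# Hypothetical survey records.
raw = [
    {"customer": " alice ", "region": "north", "score": 4},
    {"customer": "Alice", "region": "NORTH", "score": 4},   # duplicate of the first row
    {"customer": "bob", "region": "south", "score": None},  # missing value
    {"customer": "carol", "region": "South ", "score": 2},
]
cleaned = clean_records(raw)
```

Standardizing before de-duplicating matters here: the two "Alice" rows only become identical after whitespace and case are normalized.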

4. Data Exploration

Perform exploratory data analysis (EDA) to understand data patterns, distributions, and relationships.

- Example: Use summary statistics and visualizations like histograms or scatter plots.

5. Data Transformation

Transform raw data into a usable format by scaling, encoding, or aggregating.

- Example: Convert categorical data into numerical values for machine learning algorithms.
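Two common transformations, encoding and scaling, can be sketched as follows. The function names and the sample values are hypothetical; libraries such as Pandas or Scikit-learn provide equivalents, but this sketch uses only plain Python.

```python
def one_hot(values):
    """Encode categorical values as 0/1 indicator vectors (one-hot encoding)."""
    categories = sorted(set(values))
    encoded = [[1 if v == c else 0 for c in categories] for v in values]
    return categories, encoded


def min_max_scale(values):
    """Rescale numeric values linearly to the range [0, 1]."""
    lo, hi = min(values), max(values)
    if hi == lo:  # constant column: avoid division by zero
        return [0.0 for _ in values]
    return [(v - lo) / (hi - lo) for v in values]


# Hypothetical subscription plans and monthly fees.
plans = ["basic", "premium", "basic", "standard"]
categories, encoded = one_hot(plans)
scaled = min_max_scale([10, 20, 40])
```

Each row of `encoded` has exactly one 1, in the column of its category, which is the numeric form many machine learning algorithms expect.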

6. Analysis and Interpretation

Apply appropriate methods or models to analyze the data and extract insights.

- Example: Use regression analysis to predict customer churn rates.

7. Reporting and Visualization

Present findings in a clear and actionable format using dashboards, charts, or reports.

- Example: Create a dashboard summarizing customer satisfaction scores by region.
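The figures behind a dashboard like this are usually simple aggregates. As a minimal sketch, assuming responses arrive as hypothetical `(region, score)` pairs, the per-region averages can be computed like this:

```python
from collections import defaultdict
from statistics import mean


def mean_score_by_region(responses):
    """Group satisfaction scores by region and average each group."""
    by_region = defaultdict(list)
    for region, score in responses:
        by_region[region].append(score)
    # Sort regions so the report has a stable order.
    return {region: mean(scores) for region, scores in sorted(by_region.items())}


# Hypothetical survey responses.
responses = [("North", 4), ("South", 2), ("North", 5), ("South", 3), ("East", 4)]
summary = mean_score_by_region(responses)
```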

8. Decision-Making and Implementation

Use the insights to make recommendations or implement strategies.

- Example: Launch targeted marketing campaigns based on customer preferences.

Methods of Data Analysis

1. Statistical Methods

- Descriptive Statistics: Summarizes data using measures like mean, median, and standard deviation.

- Inferential Statistics: Draws conclusions or predictions from sample data using techniques like hypothesis testing or confidence intervals.
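Both kinds of statistics can be illustrated with Python's standard `statistics` module. The sample values are made up, and the confidence interval uses a normal approximation, which is an assumption that becomes shaky for small samples (a t-based interval would be wider).

```python
from math import sqrt
from statistics import NormalDist, mean, median, stdev


def describe(sample):
    """Descriptive statistics: summarize the sample itself."""
    return {
        "n": len(sample),
        "mean": mean(sample),
        "median": median(sample),
        "stdev": stdev(sample),  # sample standard deviation (n - 1 denominator)
    }


def mean_confidence_interval(sample, confidence=0.95):
    """Inferential statistics: interval estimate for the population mean.

    Uses the normal approximation mean +/- z * s / sqrt(n).
    """
    z = NormalDist().inv_cdf(0.5 + confidence / 2)
    margin = z * stdev(sample) / sqrt(len(sample))
    m = mean(sample)
    return m - margin, m + margin


# Hypothetical daily order counts.
sample = [12, 15, 11, 14, 13, 16, 10, 13]
stats = describe(sample)
low, high = mean_confidence_interval(sample)
```

The descriptive summary says something about these eight values only; the interval is a statement about the population they were drawn from.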

2. Data Mining

Data mining involves discovering patterns, correlations, and anomalies in large datasets.

- Example: Identifying purchasing patterns in retail through association rules.
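Association rules are typically judged by their support and confidence. The sketch below computes both for a handful of hypothetical shopping baskets; it illustrates the two measures only and is not a full rule-mining algorithm such as Apriori.

```python
def support(transactions, items):
    """Fraction of transactions that contain every item in `items`."""
    items = set(items)
    return sum(items <= t for t in transactions) / len(transactions)


def confidence(transactions, antecedent, consequent):
    """Estimated P(consequent | antecedent) for the rule antecedent -> consequent."""
    both = set(antecedent) | set(consequent)
    # Raises ZeroDivisionError if the antecedent never occurs.
    return support(transactions, both) / support(transactions, antecedent)


# Hypothetical baskets, one set of items per transaction.
baskets = [
    {"bread", "butter"},
    {"bread", "butter", "milk"},
    {"bread", "milk"},
    {"milk"},
]
rule_support = support(baskets, {"bread", "butter"})
rule_confidence = confidence(baskets, {"bread"}, {"butter"})
```

Here bread and butter appear together in half of the baskets, and two of the three baskets containing bread also contain butter.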

3. Machine Learning

Applies algorithms to build predictive models and automate decision-making.

- Example: Using supervised learning to classify email spam.

4. Text Analysis

Analyzes textual data to extract insights, often used in sentiment analysis or topic modeling.

- Example: Analyzing customer reviews to understand product sentiment.

5. Time-Series Analysis

Focuses on analyzing data points collected over time to identify trends and patterns.

- Example: Forecasting stock prices based on historical data.
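Two basic time-series techniques, a moving average and simple exponential smoothing, can be sketched as below. The sales figures and the smoothing factor `alpha` are assumptions chosen for illustration; real forecasting would also need to account for trend and seasonality.

```python
def moving_average(series, window):
    """Average of each run of `window` consecutive points."""
    if window <= 0 or window > len(series):
        raise ValueError("window must be between 1 and len(series)")
    return [
        sum(series[i - window + 1 : i + 1]) / window
        for i in range(window - 1, len(series))
    ]


def exponential_smoothing(series, alpha):
    """Simple exponential smoothing: s_t = alpha * x_t + (1 - alpha) * s_(t-1)."""
    if not 0 < alpha <= 1:
        raise ValueError("alpha must be in (0, 1]")
    smoothed = [series[0]]  # initialize with the first observation
    for x in series[1:]:
        smoothed.append(alpha * x + (1 - alpha) * smoothed[-1])
    return smoothed


# Hypothetical monthly sales.
sales = [10, 12, 14, 16]
trend = moving_average(sales, window=2)
smoothed = exponential_smoothing(sales, alpha=0.5)
next_forecast = smoothed[-1]  # one-step-ahead forecast under this model
```

A larger `alpha` makes the smoothed series react faster to recent values; a smaller one gives more weight to history.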

6. Data Visualization

Transforms data into visual representations like charts, graphs, and heatmaps to make findings comprehensible.

- Example: Using bar charts to compare monthly sales performance.

7. Predictive Analytics

Uses statistical models and machine learning to forecast future outcomes based on historical data.

- Example: Predicting the likelihood of equipment failure in a manufacturing plant.

8. Diagnostic Analysis

Focuses on identifying causes of observed patterns or trends in data.

- Example: Investigating why sales dropped in a particular quarter.

Types of Data Analysis

1. Descriptive Analysis

- Purpose: Summarizes raw data to provide insights into past trends and performance.

- Example: Analyzing average customer spending per month.

2. Exploratory Analysis

- Purpose: Identifies patterns, relationships, or hypotheses for further study.

- Example: Exploring correlations between advertising spend and sales.

3. Inferential Analysis

- Purpose: Draws conclusions or makes predictions about a population based on sample data.

- Example: Estimating national voter preferences using survey data.

4. Diagnostic Analysis

- Purpose: Examines the reasons behind observed outcomes or trends.

- Example: Investigating why website traffic decreased after a redesign.

5. Predictive Analysis

- Purpose: Forecasts future outcomes based on historical data.

- Example: Predicting customer churn using machine learning algorithms.

6. Prescriptive Analysis

- Purpose: Recommends actions based on data insights and predictive models.

- Example: Suggesting the best marketing channels to maximize ROI.

Tools for Data Analysis

1. Programming Languages

- Python: Popular for data manipulation, analysis, and machine learning (e.g., Pandas, NumPy, Scikit-learn).

- R: Ideal for statistical computing and visualization.

2. Data Visualization Tools

- Tableau: Creates interactive dashboards and visualizations.

- Power BI: Microsoft’s tool for business intelligence and reporting.

3. Statistical Software

- SPSS: Used for statistical analysis in social sciences.

- SAS: Advanced analytics, data management, and predictive modeling tool.

4. Big Data Platforms

- Hadoop: Framework for processing large-scale datasets.

- Apache Spark: Fast data processing engine for big data analytics.

5. Spreadsheet Tools

- Microsoft Excel: Widely used for basic data analysis and visualization.

- Google Sheets: Collaborative online spreadsheet tool.

Challenges in Data Analysis

- Data Quality Issues: Missing, inconsistent, or inaccurate data can compromise results.

- Scalability: Analyzing large datasets requires advanced tools and computing power.

- Bias in Data: Skewed datasets can lead to misleading conclusions.

- Complexity: Choosing the appropriate analysis methods and models can be challenging.

Applications of Data Analysis

- Business: Improving customer experience through sales and marketing analytics.

- Healthcare: Analyzing patient data to improve treatment outcomes.

- Education: Evaluating student performance and designing effective teaching strategies.

- Finance: Detecting fraudulent transactions using predictive models.

- Social Science: Understanding societal trends through demographic analysis.

Data analysis is an essential process for transforming raw data into actionable insights. By understanding the process, methods, and types of data analysis, researchers and professionals can effectively tackle complex problems, uncover trends, and make data-driven decisions. With advancements in tools and technology, the scope and impact of data analysis continue to expand, shaping the future of industries and research.

About the author

Muhammad Hassan

Researcher, Academic Writer, Web developer

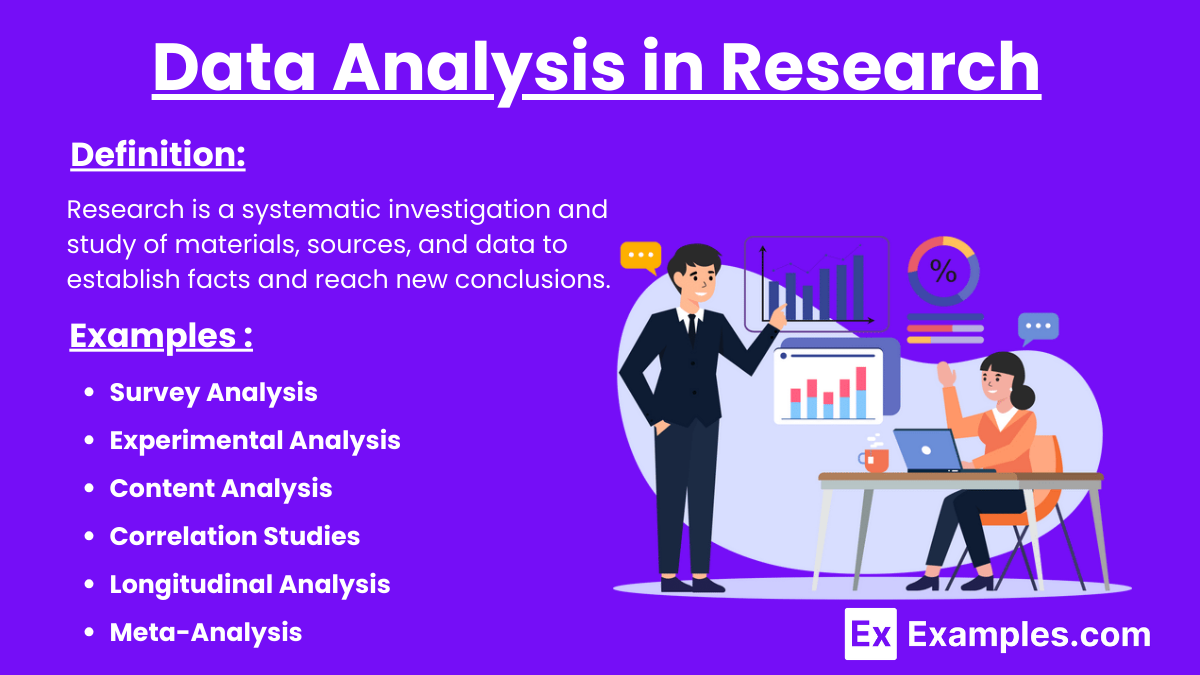

Data Analysis in Research

Data analysis in research involves systematically applying statistical and logical techniques to describe, illustrate, condense, and evaluate data. It is a crucial step that enables researchers to identify patterns, relationships, and trends within the data, transforming raw information into valuable insights. Through methods such as descriptive statistics, inferential statistics, and qualitative analysis, researchers can interpret their findings, draw conclusions, and support decision-making processes. An effective data analysis plan and robust methodology ensure the accuracy and reliability of research outcomes, ultimately contributing to the advancement of knowledge across various fields.

What is Data Analysis in Research?

Data analysis in research involves using statistical and logical techniques to describe, summarize, and compare collected data. This includes inspecting, cleaning, transforming, and modeling data to find useful information and support decision-making. Quantitative data provides measurable insights, and a solid research design ensures accuracy and reliability. This process helps validate hypotheses, identify patterns, and make informed conclusions, making it a crucial step in the scientific method.

Examples of Data Analysis in Research

- Survey Analysis : Researchers collect survey responses from a sample population to gauge opinions, behaviors, or characteristics. Using descriptive statistics, they summarize the data through means, medians, and modes, and then apply inferential statistics to generalize findings to a larger population.

- Experimental Analysis : In scientific experiments, researchers manipulate one or more variables to observe the effect on a dependent variable. Data is analyzed using methods such as ANOVA or regression analysis to determine if changes in the independent variable(s) significantly affect the dependent variable.

- Content Analysis : Qualitative research often involves analyzing textual data, such as interview transcripts or open-ended survey responses. Researchers code the data to identify recurring themes, patterns, and categories, providing a deeper understanding of the subject matter.

- Correlation Studies : Researchers explore the relationship between two or more variables using correlation coefficients. For example, a study might examine the correlation between hours of study and academic performance to identify if there is a significant positive relationship.

- Longitudinal Analysis : This type of analysis involves collecting data from the same subjects over a period of time. Researchers analyze this data to observe changes and developments, such as studying the long-term effects of a specific educational intervention on student achievement.

- Meta-Analysis : By combining data from multiple studies, researchers perform a meta-analysis to increase the overall sample size and enhance the reliability of findings. This method helps in synthesizing research results to draw broader conclusions about a particular topic or intervention.
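The correlation study mentioned above (hours of study versus academic performance) comes down to computing a correlation coefficient. A minimal sketch of Pearson's r follows; the data points are invented for illustration, and judging statistical significance would require a further test that is not shown here.

```python
from math import sqrt
from statistics import mean


def pearson_r(xs, ys):
    """Pearson correlation coefficient between two equal-length samples."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two samples of equal length >= 2")
    mx, my = mean(xs), mean(ys)
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sx = sqrt(sum((x - mx) ** 2 for x in xs))
    sy = sqrt(sum((y - my) ** 2 for y in ys))
    return cov / (sx * sy)  # undefined (division by zero) if either sample is constant


# Hypothetical hours of study and exam scores for five students.
hours = [1, 2, 3, 4, 5]
scores = [52, 55, 61, 64, 70]
r = pearson_r(hours, scores)
```

A value of r close to +1 indicates a strong positive linear relationship; it does not by itself show that studying causes the higher scores.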

Data Analysis in Qualitative Research

Data analysis in qualitative research involves systematically examining non-numeric data, such as interviews, observations, and textual materials, to identify patterns, themes, and meanings. Here are some key steps and methods used in qualitative data analysis:

- Coding : Researchers categorize the data by assigning labels or codes to specific segments of the text. These codes represent themes or concepts relevant to the research question.

- Thematic Analysis : This method involves identifying and analyzing patterns or themes within the data. Researchers review coded data to find recurring topics and construct a coherent narrative around these themes.

- Content Analysis : A systematic approach to categorize verbal or behavioral data to classify, summarize, and tabulate the data. This method often involves counting the frequency of specific words or phrases.

- Narrative Analysis : Researchers focus on the stories and experiences shared by participants, analyzing the structure, content, and context of the narratives to understand how individuals make sense of their experiences.

- Grounded Theory : This method involves generating a theory based on the data collected. Researchers collect and analyze data simultaneously, continually refining and adjusting their theoretical framework as new data emerges.

- Discourse Analysis : Examining language use and communication patterns within the data, researchers analyze how language constructs social realities and power relationships.

- Case Study Analysis : An in-depth analysis of a single case or multiple cases, exploring the complexities and unique aspects of each case to gain a deeper understanding of the phenomenon under study.
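Coding and frequency-based content analysis can be approximated in code. The sketch below is a deliberately crude, keyword-based stand-in for what is normally a manual, interpretive process; the codebook, its keywords, and the responses are all hypothetical.

```python
import re
from collections import Counter

# Hypothetical codebook: each code (theme) is flagged by a set of keywords.
CODEBOOK = {
    "price": {"price", "cost", "expensive", "cheap"},
    "service": {"staff", "support", "helpful", "rude"},
}


def tokenize(text):
    """Lowercase a text and split it into word tokens."""
    return re.findall(r"[a-z']+", text.lower())


def word_frequencies(texts):
    """Content analysis: count how often each word occurs across all texts."""
    return Counter(word for text in texts for word in tokenize(text))


def code_counts(texts, codebook):
    """Coding: count the texts in which each code appears at least once."""
    counts = Counter()
    for text in texts:
        tokens = set(tokenize(text))
        for code, keywords in codebook.items():
            if tokens & keywords:
                counts[code] += 1
    return counts


# Hypothetical open-ended survey responses.
answers = [
    "The staff were helpful but the price is too high.",
    "Too expensive for what you get.",
    "Support was rude.",
]
frequencies = word_frequencies(answers)
codes = code_counts(answers, CODEBOOK)
```

Keyword matching misses synonyms, negation, and context, so in practice it would only be a first pass before a researcher reviews the coded segments.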

Data Analysis in Quantitative Research

Data analysis in quantitative research involves the systematic application of statistical techniques to numerical data to identify patterns, relationships, and trends. Here are some common methods used in quantitative data analysis:

- Descriptive Statistics : This includes measures such as mean, median, mode, standard deviation, and range, which summarize and describe the main features of a data set.

- Inferential Statistics : Techniques like t-tests, chi-square tests, and ANOVA (Analysis of Variance) are used to make inferences or generalizations about a population based on a sample.

- Regression Analysis : This method examines the relationship between dependent and independent variables. Simple linear regression analyzes the relationship between two variables, while multiple regression examines the relationship between one dependent variable and several independent variables.

- Correlation Analysis : Researchers use correlation coefficients to measure the strength and direction of the relationship between two variables.

- Factor Analysis : This technique is used to identify underlying relationships between variables by grouping them into factors based on their correlations.

- Cluster Analysis : A method used to group a set of objects or cases into clusters, where objects in the same cluster are more similar to each other than to those in other clusters.

- Hypothesis Testing : This involves testing an assumption or hypothesis about a population parameter. Common tests include z-tests, t-tests, and chi-square tests, which help determine if there is enough evidence to reject the null hypothesis.

- Time Series Analysis : This method analyzes data points collected or recorded at specific time intervals to identify trends, cycles, and seasonal variations.

- Multivariate Analysis : Techniques like MANOVA (Multivariate Analysis of Variance) and PCA (Principal Component Analysis) are used to analyze data that involves multiple variables to understand their effect and relationships.

- Structural Equation Modeling (SEM) : A multivariate technique that combines factor analysis and multiple regression analysis to analyze the structural relationships between measured variables and latent constructs.
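Of the methods listed above, simple linear regression is the easiest to show in full. The sketch below fits a line by ordinary least squares using the closed-form slope and intercept; the data are invented, and multiple regression would need a matrix-based solver rather than these two formulas.

```python
from statistics import mean


def fit_line(xs, ys):
    """Ordinary least squares fit of y = intercept + slope * x."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two samples of equal length >= 2")
    mx, my = mean(xs), mean(ys)
    sxx = sum((x - mx) ** 2 for x in xs)
    if sxx == 0:
        raise ValueError("x values are all identical; slope is undefined")
    slope = sum((x - mx) * (y - my) for x, y in zip(xs, ys)) / sxx
    intercept = my - slope * mx
    return slope, intercept


def predict(slope, intercept, x):
    """Predicted value of the dependent variable at x."""
    return intercept + slope * x


# Hypothetical data lying exactly on y = 1 + 2x.
xs = [1, 2, 3, 4]
ys = [3, 5, 7, 9]
slope, intercept = fit_line(xs, ys)
forecast = predict(slope, intercept, 5)
```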

Data Analysis in Research Methodology

Data analysis in research methodology involves the process of systematically applying statistical and logical techniques to describe, condense, recap, and evaluate data. Here are the key components and methods involved:

- Data Preparation : This step includes collecting, cleaning, and organizing raw data. Researchers ensure data quality by handling missing values, removing duplicates, and correcting errors.

- Descriptive Analysis : Researchers use descriptive statistics to summarize the basic features of the data. This includes measures such as mean, median, mode, standard deviation, and graphical representations like histograms and pie charts.

- Inferential Analysis : This involves using statistical tests to make inferences about the population from which the sample was drawn. Common techniques include t-tests, chi-square tests, ANOVA, and regression analysis.

- Qualitative Data Analysis : For non-numeric data, researchers employ methods like coding, thematic analysis, content analysis, narrative analysis, and discourse analysis to identify patterns and themes.

- Quantitative Data Analysis : For numeric data, researchers apply statistical methods such as correlation, regression, factor analysis, cluster analysis, and time series analysis to identify relationships and trends.

- Hypothesis Testing : Researchers test hypotheses using statistical methods to determine whether there is enough evidence to reject the null hypothesis. This involves calculating p-values and confidence intervals.

- Data Interpretation : This step involves interpreting the results of the data analysis. Researchers draw conclusions based on the statistical findings and relate them back to the research questions and objectives.

- Validation and Reliability : Ensuring the validity and reliability of the analysis is crucial. Researchers check for consistency in the results and use methods like cross-validation and reliability testing to confirm their findings.

- Visualization : Effective data visualization techniques, such as charts, graphs, and plots, are used to present the data in a clear and understandable manner, aiding in the interpretation and communication of results.

- Reporting : The final step involves reporting the results in a structured format, often including an introduction, methodology, results, discussion, and conclusion. This report should clearly convey the findings and their implications for the research question.
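The hypothesis-testing component can be illustrated with an exact permutation test. Note that this is a swapped-in technique: the text names t-tests, chi-square tests, and ANOVA, whose p-values need distribution tables or a statistics library, whereas a permutation test can be written with the standard library alone. The scores below are hypothetical.

```python
from itertools import combinations
from statistics import mean


def permutation_test(group_a, group_b):
    """Exact two-sided permutation test for a difference in means.

    Null hypothesis: group labels are exchangeable (no difference).
    Returns the p-value: the share of all possible relabelings whose absolute
    mean difference is at least as large as the observed one.
    """
    pooled = list(group_a) + list(group_b)
    n_a, n_b = len(group_a), len(group_b)
    observed = abs(mean(group_a) - mean(group_b))
    total = sum(pooled)
    extreme = count = 0
    for idx in combinations(range(len(pooled)), n_a):
        sum_a = sum(pooled[i] for i in idx)
        diff = abs(sum_a / n_a - (total - sum_a) / n_b)
        if diff >= observed - 1e-12:  # small tolerance for float rounding
            extreme += 1
        count += 1
    return extreme / count


# Hypothetical test scores under a new and a traditional teaching method.
new_method = [78, 82, 85, 88, 90]
traditional = [65, 68, 70, 72, 75]
p_value = permutation_test(new_method, traditional)
```

Enumerating every relabeling is only feasible for small samples (here 252 splits); for larger ones, random sampling of relabelings is the usual workaround. A p-value below the chosen significance level, commonly 0.05, counts as evidence against the null hypothesis.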

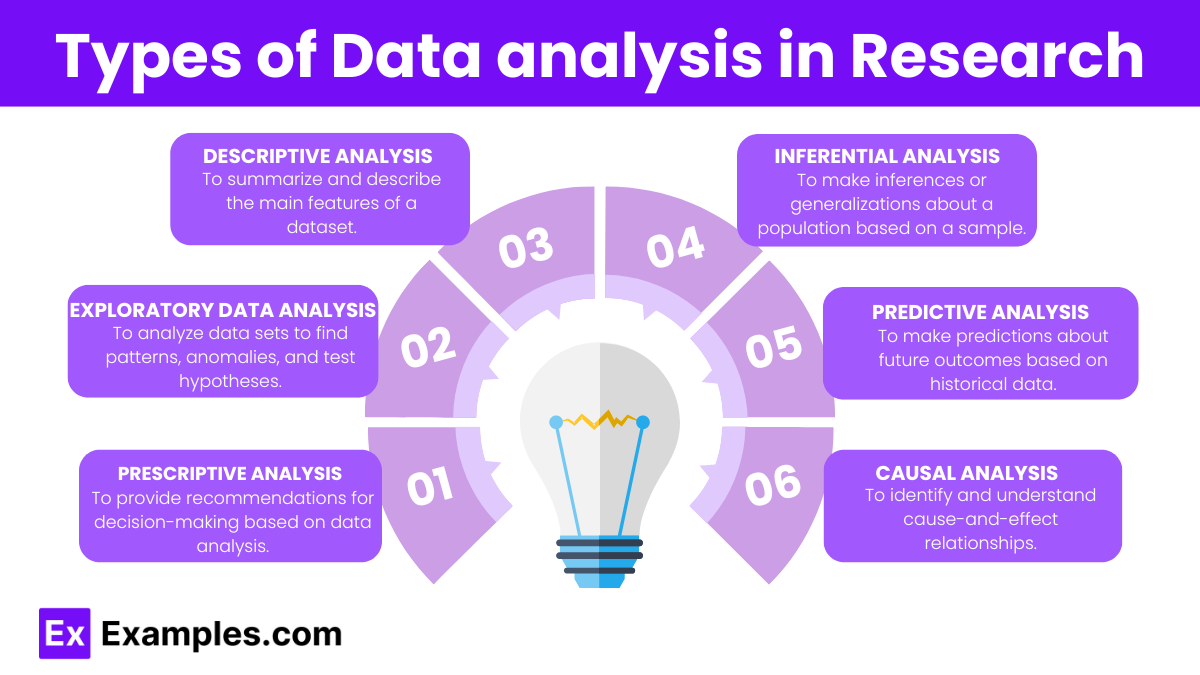

Types of Data Analysis in Research

1. Descriptive Analysis

- Purpose : To summarize and describe the main features of a dataset.

- Methods : Mean, median, mode, standard deviation, frequency distributions, and graphical representations like histograms and pie charts.

- Example : Calculating the average test scores of students in a class.

2. Inferential Analysis

- Purpose : To make inferences or generalizations about a population based on a sample.

- Methods : T-tests, chi-square tests, ANOVA (Analysis of Variance), regression analysis, and confidence intervals.

- Example : Testing whether a new teaching method significantly affects student performance compared to a traditional method.

3. Exploratory Analysis

- Purpose : To explore data sets in order to find patterns, spot anomalies, and form hypotheses to test.

- Methods : Visualization techniques like box plots, scatter plots, and heat maps; summary statistics.

- Example : Visualizing the relationship between hours of study and exam scores using a scatter plot.

4. Predictive Analysis

- Purpose : To make predictions about future outcomes based on historical data.

- Methods : Regression analysis, machine learning algorithms (e.g., decision trees, neural networks), and time series analysis.

- Example : Predicting student graduation rates based on their academic performance and demographic data.

5. Prescriptive Analysis

- Purpose : To provide recommendations for decision-making based on data analysis.

- Methods : Optimization algorithms, simulation, and decision analysis.

- Example : Suggesting the best course of action for improving student retention rates based on various predictive factors.

6. Causal Analysis

- Purpose : To identify and understand cause-and-effect relationships.

- Methods : Controlled experiments, regression analysis, path analysis, and structural equation modeling (SEM).

- Example : Determining the impact of a specific intervention, like a new curriculum, on student learning outcomes.

7. Mechanistic Analysis

- Purpose : To understand the specific mechanisms through which variables affect one another.

- Methods : Detailed modeling and simulation, often used in scientific research to understand biological or physical processes.

- Example : Studying how a specific drug interacts with biological pathways to affect patient health.

How to Write Data Analysis in Research

Data analysis is crucial for interpreting collected data and drawing meaningful conclusions. Follow these steps to write an effective data analysis section in your research.

1. Prepare Your Data

Ensure your data is clean and organized:

- Remove duplicates and irrelevant data.

- Check for errors and correct them.

- Categorize data if necessary.

2. Choose the Right Analysis Method

Select a method that fits your data type and research question:

- Quantitative Data : Use statistical analysis such as t-tests, ANOVA, regression analysis.

- Qualitative Data : Use thematic analysis, content analysis, or narrative analysis.

3. Describe Your Analytical Techniques

Clearly explain the methods you used:

- Software and Tools : Mention any software (e.g., SPSS, NVivo) used.

- Statistical Tests : Detail the statistical tests applied, such as chi-square tests or correlation analysis.

- Qualitative Techniques : Describe coding and theme identification processes.

4. Present Your Findings

Organize your findings logically:

- Use Tables and Figures : Display data in tables, graphs, and charts for clarity.

- Summarize Key Results : Highlight the most significant findings.

- Include Relevant Statistics : Report p-values, confidence intervals, means, and standard deviations.

5. Interpret the Results

Explain what your findings mean in the context of your research:

- Compare with Hypotheses : State whether the results support your hypotheses.

- Relate to Literature : Compare your results with previous studies.

- Discuss Implications : Explain the significance of your findings.

6. Discuss Limitations

Acknowledge any limitations in your data or analysis:

- Sample Size : Note if the sample size was small.

- Biases : Mention any potential biases in data collection.

- External Factors : Discuss any factors that might have influenced the results.

7. Conclude with a Summary

Wrap up your data analysis section:

- Restate Key Findings : Briefly summarize the main results.

- Future Research : Suggest areas for further investigation.

Importance of Data Analysis in Research

Data analysis is a fundamental component of the research process. Here are five key points highlighting its importance:

- Enhances Accuracy and Reliability : Data analysis ensures that research findings are accurate and reliable. By using statistical techniques, researchers can minimize errors and biases, ensuring that the results are dependable.

- Facilitates Informed Decision-Making : Through data analysis, researchers can make informed decisions based on empirical evidence. This is crucial in fields like healthcare, business, and social sciences, where decisions impact policies, strategies, and outcomes.

- Identifies Trends and Patterns : Analyzing data helps researchers uncover trends and patterns that might not be immediately visible. These insights can lead to new hypotheses and areas of study, advancing knowledge in the field.

- Supports Hypothesis Testing : Data analysis is vital for testing hypotheses. Researchers can use statistical methods to determine whether their hypotheses are supported or refuted, which is essential for validating theories and advancing scientific understanding.

- Provides a Basis for Predictions : By analyzing current and historical data, researchers can develop models that predict future outcomes. This predictive capability is valuable in numerous fields, including economics, climate science, and public health.

FAQs

What is the difference between qualitative and quantitative data analysis?

Qualitative analysis focuses on non-numerical data to understand concepts, while quantitative analysis deals with numerical data to identify patterns and relationships.

What is descriptive statistics?

Descriptive statistics summarize and describe the features of a data set, including measures like mean, median, mode, and standard deviation.

What is inferential statistics?

Inferential statistics use sample data to make generalizations about a larger population, often through hypothesis testing and confidence intervals.

What is regression analysis?

Regression analysis examines the relationship between dependent and independent variables, helping to predict outcomes and understand variable impacts.

What is the role of software in data analysis?

Software like SPSS, R, and Excel facilitate data analysis by providing tools for statistical calculations, visualization, and data management.

What are data visualization techniques?

Data visualization techniques include charts, graphs, and maps, which help in presenting data insights clearly and effectively.

What is data cleaning?

Data cleaning involves correcting or removing errors and inconsistencies in a data set and handling missing values, to ensure accuracy and reliability in analysis.

What is the significance of sample size in data analysis?

Sample size affects the accuracy and generalizability of results; larger samples generally provide more reliable insights.

How does correlation differ from causation?

Correlation indicates a relationship between variables, while causation implies one variable directly affects the other.

What are the ethical considerations in data analysis?

Ethical considerations include ensuring data privacy, obtaining informed consent, and avoiding data manipulation or misrepresentation.

Data & Finance for Work & Life

Data Analysis: Types, Methods & Techniques (a Complete List)

( Updated Version )

While the term sounds intimidating, “data analysis” is nothing more than making sense of information in a table. It consists of filtering, sorting, grouping, and manipulating data tables with basic algebra and statistics.

In fact, you don’t need experience to understand the basics. You have already worked with data extensively in your life, and “analysis” is nothing more than a fancy word for good sense and basic logic.

Over time, people have intuitively categorized the best logical practices for treating data. These categories are what we call today types , methods , and techniques .

This article provides a comprehensive list of types, methods, and techniques, and explains the difference between them.

Descriptive, Diagnostic, Predictive, & Prescriptive Analysis

If you Google “types of data analysis,” the first few results will explore descriptive , diagnostic , predictive , and prescriptive analysis. Why? Because these names are easy to understand and are used a lot in “the real world.”

Descriptive analysis is an informational method, diagnostic analysis explains “why” a phenomenon occurs, predictive analysis seeks to forecast the result of an action, and prescriptive analysis identifies solutions to a specific problem.

That said, these are only four branches of a larger analytical tree.

Good data analysts know how to position these four types within other analytical methods and tactics, allowing them to weigh the strengths and weaknesses of each and uncover the most valuable insights.

Let’s explore the full analytical tree to understand how to appropriately assess and apply these four traditional types.

Tree diagram of Data Analysis Types, Methods, and Techniques

Here’s a picture to visualize the structure and hierarchy of data analysis types, methods, and techniques.

If it’s too small you can view the picture in a new tab . Open it to follow along!

Note: basic descriptive statistics such as mean , median , and mode , as well as standard deviation , are not shown because most people are already familiar with them. In the diagram, they would fall under the “descriptive” analysis type.

Tree Diagram Explained

The highest-level classification of data analysis is quantitative vs qualitative . Quantitative implies numbers while qualitative implies information other than numbers.

Quantitative data analysis then splits into mathematical analysis and artificial intelligence (AI) analysis . Mathematical types then branch into descriptive , diagnostic , predictive , and prescriptive .

Methods falling under mathematical analysis include clustering , classification , forecasting , and optimization . Qualitative data analysis methods include content analysis , narrative analysis , discourse analysis , framework analysis , and/or grounded theory .

Moreover, mathematical techniques include regression , Naïve Bayes , Simple Exponential Smoothing , cohorts , factors , linear discriminants , and more, whereas techniques falling under the AI type include artificial neural networks , decision trees , evolutionary programming , and fuzzy logic . Techniques under qualitative analysis include text analysis , coding , idea pattern analysis , and word frequency .

It’s a lot to remember! Don’t worry, once you understand the relationship and motive behind all these terms, it’ll be like riding a bike.

We’ll move down the list from top to bottom and I encourage you to open the tree diagram above in a new tab so you can follow along .

But first, let’s just address the elephant in the room: what’s the difference between methods and techniques anyway?

Difference between methods and techniques

Though often used interchangeably, methods and techniques are not the same. By definition, methods are the process by which techniques are applied, and techniques are the practical application of those methods.

For example, consider driving. Methods include staying in your lane, stopping at a red light, and parking in a spot. Techniques include turning the steering wheel, braking, and pushing the gas pedal.

Data sets: observations and fields

It’s important to understand the basic structure of data tables to comprehend the rest of the article. A data set consists of one far-left column containing observations, then a series of columns containing the fields (aka “traits” or “characteristics”) that describe each observation. For example, imagine we want a data table for fruit. It might look like this:
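As a minimal sketch of this structure, a table can be held as a dictionary whose keys are the observations and whose inner keys are the fields. The fruit names, fields, and values below are made up for illustration and are not the article's own table; the helper functions are likewise hypothetical.

```python
# Illustrative fruit data: each key is an observation, each inner key is a field.
fruit_table = {
    "apple": {"color": "red", "weight_g": 180, "citrus": False},
    "banana": {"color": "yellow", "weight_g": 120, "citrus": False},
    "lemon": {"color": "yellow", "weight_g": 60, "citrus": True},
    "orange": {"color": "orange", "weight_g": 150, "citrus": True},
}


def filter_rows(table, predicate):
    """Keep only the observations whose fields satisfy the predicate."""
    return {name: row for name, row in table.items() if predicate(row)}


def sort_by(table, field):
    """Observation names ordered by the value of one field."""
    return sorted(table, key=lambda name: table[name][field])


def group_by(table, field):
    """Map each distinct field value to the observations that share it."""
    groups = {}
    for name, row in table.items():
        groups.setdefault(row[field], []).append(name)
    return groups


citrus = filter_rows(fruit_table, lambda row: row["citrus"])
lightest_first = sort_by(fruit_table, "weight_g")
by_color = group_by(fruit_table, "color")
```

Filtering, sorting, and grouping are the same basic table operations the introduction describes as the core of data analysis.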

Now let’s turn to types, methods, and techniques. Each heading below consists of a description, relative importance, the nature of data it explores, and the motivation for using it.

Quantitative Analysis

- It accounts for more than 50% of all data analysis and is by far the most widespread and well-known type of data analysis.

- As you have seen, it holds descriptive, diagnostic, predictive, and prescriptive methods, which in turn hold some of the most important techniques available today, such as clustering and forecasting.

- It can be broken down into mathematical and AI analysis.

- Importance : Very high . Quantitative analysis is a must for anyone interested in becoming or improving as a data analyst.

- Nature of Data: data treated under quantitative analysis is, quite simply, quantitative. It encompasses all numeric data.

- Motive: to extract insights. (Note: we’re at the top of the pyramid, this gets more insightful as we move down.)

Qualitative Analysis

- It accounts for less than 30% of all data analysis and is common in social sciences .

- It can refer to the simple recognition of qualitative elements, which is not analytic in any way, but most often refers to methods that assign numeric values to non-numeric data for analysis.

- Because of this, some argue that it’s ultimately a quantitative type.

- Importance: Medium. In general, knowing qualitative data analysis is not common or even necessary for corporate roles. However, for researchers working in social sciences, its importance is very high.

- Nature of Data: data treated under qualitative analysis is non-numeric. However, as part of the analysis, analysts turn non-numeric data into numbers, at which point many argue it is no longer qualitative analysis.

- Motive: to extract insights. (This will be more important as we move down the pyramid.)

Mathematical Analysis

- Description: mathematical data analysis is a subtype of quantitative data analysis that designates methods and techniques based on statistics, algebra, and logical reasoning to extract insights. It stands in opposition to artificial intelligence analysis.

- Importance: Very High. The most widespread methods and techniques fall under mathematical analysis. In fact, it’s so common that many people use “quantitative” and “mathematical” analysis interchangeably.

- Nature of Data: numeric. By definition, all data under mathematical analysis are numbers.

- Motive: to extract measurable insights that can be used to act upon.

Artificial Intelligence & Machine Learning Analysis

- Description: artificial intelligence and machine learning analyses designate techniques based on the titular skills. They are not traditionally mathematical, but they are quantitative since they use numbers. Applications of AI & ML analysis techniques are developing, but they’re not yet mainstream enough to show promise across the field.

- Importance: Medium. As of today (September 2020), you don’t need to be fluent in AI & ML data analysis to be a great analyst. But if it’s a field that interests you, learn it. Many believe that in 10 years’ time its importance will be very high.

- Nature of Data: numeric.

- Motive: to create calculations that build on themselves in order and extract insights without direct input from a human.

Descriptive Analysis

- Description: descriptive analysis is a subtype of mathematical data analysis that uses methods and techniques to provide information about the size, dispersion, groupings, and behavior of data sets. This may sound complicated, but just think about mean, median, and mode: all three are types of descriptive analysis. They provide information about the data set. We’ll look at specific techniques below.

- Importance: Very high. Descriptive analysis is among the most commonly used data analyses in both corporations and research today.

- Nature of Data: the nature of data under descriptive statistics is sets. A set is simply a collection of numbers that behaves in predictable ways. Data reflects real life, and there are patterns everywhere to be found. Descriptive analysis describes those patterns.

- Motive: the motive behind descriptive analysis is to understand how numbers in a set group together, how far apart they are from each other, and how often they occur. As with most statistical analysis, the more data points there are, the easier it is to describe the set.
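A minimal sketch of these descriptive measures using Python’s standard `statistics` module. The `units_sold` values are hypothetical and chosen only for illustration:

```python
import statistics

# Hypothetical data set: units sold per day over nine days.
units_sold = [12, 15, 15, 18, 20, 15, 22, 30, 15]

mean_value = statistics.mean(units_sold)      # arithmetic average
median_value = statistics.median(units_sold)  # middle value once sorted
mode_value = statistics.mode(units_sold)      # most frequent value
spread = statistics.pstdev(units_sold)        # dispersion: population standard deviation

print(mean_value, median_value, mode_value, round(spread, 2))
```

The mean, median, and mode describe where the numbers group together, while the standard deviation describes how far apart they are.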

Diagnostic Analysis

- Description: diagnostic analysis answers the question “why did it happen?” It is an advanced type of mathematical data analysis that manipulates multiple techniques, but does not own any single one. Analysts engage in diagnostic analysis when they try to explain why.

- Importance: Very high. Diagnostics are probably the most important type of data analysis for people who don’t do analysis because they’re valuable to anyone who’s curious. They’re most common in corporations, as managers often only want to know the “why.”

- Nature of Data: data under diagnostic analysis are data sets. These sets in themselves are not enough under diagnostic analysis. Instead, the analyst must know what’s behind the numbers in order to explain “why.” That’s what makes diagnostics so challenging yet so valuable.

- Motive: the motive behind diagnostics is to diagnose — to understand why.

Predictive Analysis

- Description: predictive analysis uses past data to project future data. It’s very often one of the first kinds of analysis new researchers and corporate analysts use because it is intuitive. It is a subtype of the mathematical type of data analysis, and its three notable techniques are regression, moving average, and exponential smoothing.

- Importance: Very high. Predictive analysis is critical for any data analyst working in a corporate environment. Companies always want to know what the future will hold — especially for their revenue.

- Nature of Data: Because past and future imply time, predictive data always includes an element of time. Whether it’s minutes, hours, days, months, or years, we call this time series data. In fact, this data is so important that I’ll mention it twice so you don’t forget: predictive analysis uses time series data.

- Motive: the motive for investigating time series data with predictive analysis is to predict the future in the most analytical way possible.

Prescriptive Analysis

- Description: prescriptive analysis is a subtype of mathematical analysis that answers the question “what will happen if we do X?” It’s largely underestimated in the data analysis world because it requires diagnostic and descriptive analyses to be done before it even starts. More than simple predictive analysis, prescriptive analysis builds entire data models to show how a simple change could impact the ensemble.

- Importance: High. Prescriptive analysis is most common under the finance function in many companies. Financial analysts use it to build a financial model of the financial statements that show how that data will change given alternative inputs.

- Nature of Data: the nature of data in prescriptive analysis is data sets. These data sets contain patterns that respond differently to various inputs. Data that is useful for prescriptive analysis contains correlations between different variables. It’s through these correlations that we establish patterns and prescribe action on this basis. This analysis cannot be performed on data that exists in a vacuum — it must be viewed on the backdrop of the tangibles behind it.

- Motive: the motive for prescriptive analysis is to establish, with an acceptable degree of certainty, what results we can expect given a certain action. As you might expect, this necessitates that the analyst or researcher be aware of the world behind the data, not just the data itself.

Clustering Method

- Description: the clustering method groups data points together based on their relative closeness to further explore and treat them based on these groupings. There are two ways to group clusters: intuitively and statistically (or K-means).

- Importance: Very high. Though most corporate roles group clusters intuitively based on management criteria, a solid understanding of how to group them mathematically is an excellent descriptive and diagnostic approach to allow for prescriptive analysis thereafter.

- Nature of Data: the nature of data useful for clustering is sets with 1 or more data fields. While most people are used to looking at only two dimensions (x and y), clustering becomes more accurate the more fields there are.

- Motive: the motive for clustering is to understand how data sets group and to explore them further based on those groups.

Classification Method

- Description: the classification method aims to separate and group data points based on common characteristics. This can be done intuitively or statistically.

- Importance: High. While simple on the surface, classification can become quite complex. It’s very valuable in corporate and research environments, but can feel like it’s not worth the work. A good analyst can execute it quickly to deliver results.

- Nature of Data: the nature of data useful for classification is data sets. As we will see, it can be used on qualitative data as well as quantitative. This method requires knowledge of the substance behind the data, not just the numbers themselves.

- Motive: the motive for classification is to group data not based on mathematical relationships (which would be clustering), but by predetermined outputs. This is why it’s less useful for diagnostic analysis, and more useful for prescriptive analysis.

Forecasting Method

- Description: the forecasting method uses past time series data to forecast the future.

- Importance: Very high. Forecasting falls under predictive analysis and is arguably the most common and most important method in the corporate world. It is less useful in research, which prefers to understand the known rather than speculate about the future.

- Nature of Data: data useful for forecasting is time series data, which, as we’ve noted, always includes a variable of time.

- Motive: the motive for the forecasting method is the same as that of predictive analysis: to confidently estimate future values.

Optimization Method

- Description: the optimization method maximizes or minimizes values in a set given a set of criteria. It is arguably most common in prescriptive analysis. In mathematical terms, it is maximizing or minimizing a function given certain constraints.

- Importance: Very high. The idea of optimization applies to more analysis types than any other method. In fact, some argue that it is the fundamental driver behind data analysis. You would use it everywhere in research and in a corporation.

- Nature of Data: the nature of optimizable data is a data set of at least two points.

- Motive: the motive behind optimization is to achieve the best result possible given certain conditions.
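To make "maximizing a function given certain constraints" concrete, here is a small sketch that searches a list of candidate values. The demand curve, the price range, and the price cap are all hypothetical assumptions, and real optimization problems usually call for a dedicated solver rather than a brute-force search:

```python
def maximize(objective, candidates, constraint):
    """Return the candidate with the highest objective value among those meeting the constraint."""
    feasible = [c for c in candidates if constraint(c)]
    return max(feasible, key=objective)

def revenue(price):
    # Hypothetical demand curve: units sold fall by 2 for every unit of price.
    units_sold = 100 - 2 * price
    return price * units_sold

candidate_prices = range(1, 51)

# Without a meaningful constraint, revenue peaks at a price of 25.
unconstrained_best = maximize(revenue, candidate_prices, constraint=lambda p: True)

# Hypothetical business rule: the price may not exceed 20.
constrained_best = maximize(revenue, candidate_prices, constraint=lambda p: p <= 20)

print(unconstrained_best, constrained_best)
```

The constraint changes the answer: the best achievable result under the rule is not the same as the best result overall.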

Content Analysis Method

- Description: content analysis is a method of qualitative analysis that quantifies textual data to track themes across a document. It’s most common in academic fields and in social sciences, where written content is the subject of inquiry.

- Importance: High. In a corporate setting, content analysis as such is less common. If anything, Naïve Bayes (a technique we’ll look at below) is the closest corporations come to text. However, it is of the utmost importance for researchers. If you’re a researcher, check out this article on content analysis.

- Nature of Data: data useful for content analysis is textual data.

- Motive: the motive behind content analysis is to understand themes expressed in a large text.

Narrative Analysis Method

- Description: narrative analysis is a method of qualitative analysis that quantifies stories to trace themes in them. It differs from content analysis because it focuses on stories rather than research documents, and the techniques used are slightly different from those in content analysis (very nuanced and outside the scope of this article).

- Importance: Low. Unless you are highly specialized in working with stories, narrative analysis is rare.

- Nature of Data: the nature of the data useful for the narrative analysis method is narrative text.

- Motive: the motive for narrative analysis is to uncover hidden patterns in narrative text.

Discourse Analysis Method

- Description: the discourse analysis method falls under qualitative analysis and uses thematic coding to trace patterns in real-life discourse. That said, real-life discourse is oral, so it must first be transcribed into text.

- Importance: Low. Unless you are focused on understanding real-world idea sharing in a research setting, this kind of analysis is less common than the others on this list.

- Nature of Data: the nature of data useful in discourse analysis is first audio files, then transcriptions of those audio files.

- Motive: the motive behind discourse analysis is to trace patterns of real-world discussions. (As a spooky sidenote, have you ever felt like your phone microphone was listening to you and making reading suggestions? If it was, the method was discourse analysis.)

Framework Analysis Method

- Description: the framework analysis method falls under qualitative analysis and uses similar thematic coding techniques to content analysis. However, where content analysis aims to discover themes, framework analysis starts with a framework and only considers elements that fall in its purview.

- Importance: Low. As with the other textual analysis methods, framework analysis is less common in corporate settings. Even in the world of research, only some use it. Strangely, it’s very common for legislative and political research.

- Nature of Data: the nature of data useful for framework analysis is textual.

- Motive: the motive behind framework analysis is to understand what themes and parts of a text match your search criteria.

Grounded Theory Method

- Description: the grounded theory method falls under qualitative analysis and uses thematic coding to build theories around those themes.

- Importance: Low. Like other qualitative analysis techniques, grounded theory is less common in the corporate world. Even among researchers, you would be hard pressed to find many using it. Though powerful, it’s simply too rare to spend time learning.

- Nature of Data: the nature of data useful in the grounded theory method is textual.

- Motive: the motive of grounded theory method is to establish a series of theories based on themes uncovered from a text.

Clustering Technique: K-Means

- Description: k-means is a clustering technique in which each data point is assigned to the cluster whose mean is closest to it. Though this article groups it under mathematical analysis rather than AI or ML, it is an unsupervised learning technique: it repeatedly reassigns points and recomputes cluster means, and it reevaluates clusters as data points are added. Clustering techniques can be used in diagnostic, descriptive, & prescriptive data analyses.

- Importance: Very important. If you only take 3 things from this article, k-means clustering should be part of it. It is useful in any situation where n observations have multiple characteristics and we want to put them in groups.

- Nature of Data: the nature of data is at least one characteristic per observation, but the more the merrier.

- Motive: the motive for clustering techniques such as k-means is to group observations together and either understand or react to them.
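The assign-then-recompute loop behind k-means can be sketched in a few lines. This is a simplified illustration, not a production implementation: the points and starting centroids are hypothetical, and libraries such as scikit-learn handle initialization and edge cases far more carefully.

```python
import math

def k_means(points, centroids, iterations=10):
    """Group 2-D points around the nearest centroid, then recompute each centroid."""
    clusters = []
    for _ in range(iterations):
        # Assignment step: each point joins the cluster with the closest mean.
        clusters = [[] for _ in centroids]
        for point in points:
            distances = [math.dist(point, c) for c in centroids]
            clusters[distances.index(min(distances))].append(point)

        # Update step: each centroid moves to the mean of its assigned points.
        new_centroids = []
        for cluster, old in zip(clusters, centroids):
            if cluster:
                new_centroids.append(tuple(sum(axis) / len(cluster) for axis in zip(*cluster)))
            else:
                new_centroids.append(old)  # an empty cluster keeps its centroid

        if new_centroids == centroids:
            break  # nothing moved, so the grouping is stable
        centroids = new_centroids
    return centroids, clusters

# Hypothetical observations with two characteristics each.
points = [(1.0, 1.0), (1.5, 2.0), (1.0, 1.5), (8.0, 8.0), (9.0, 9.5), (8.5, 9.0)]
final_centroids, final_clusters = k_means(points, centroids=[(0.0, 0.0), (10.0, 10.0)])

print(final_centroids)
print(final_clusters)
```

The starting centroids are fixed here so the result is repeatable. In practice they are usually chosen at random, which is why repeated runs can give different groupings.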

Regression Technique

- Description: simple and multivariable regressions use either one independent variable or a combination of multiple independent variables to estimate their relationship to a single dependent variable using fitted constants (coefficients). Regressions are almost synonymous with correlation today.

- Importance: Very high. Along with clustering, if you only take 3 things from this article, regression techniques should be part of it. They’re everywhere in corporate and research fields alike.

- Nature of Data: the nature of data used in regressions is data sets with “n” number of observations and as many variables as are reasonable. It’s important, however, to distinguish between time series data and regression data. You cannot use regressions on time series data without accounting for time. The easier way is to use techniques under the forecasting method.

- Motive: The motive behind regression techniques is to understand correlations between independent variable(s) and a dependent one.
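For the simple (one independent variable) case, the fitted constants can be computed directly with ordinary least squares. The sketch below assumes hypothetical `ad_spend` and `revenue` figures that were constructed to lie exactly on a straight line:

```python
def simple_linear_regression(x, y):
    """Fit y = slope * x + intercept by ordinary least squares."""
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    # Slope: how much y changes, on average, per unit change in x.
    covariance = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y))
    variance_x = sum((xi - mean_x) ** 2 for xi in x)
    slope = covariance / variance_x
    # Intercept: the value of y when x is zero.
    intercept = mean_y - slope * mean_x
    return slope, intercept

# Hypothetical observations constructed so that revenue = 2 * ad_spend + 1.
ad_spend = [1, 2, 3, 4, 5]
revenue = [3, 5, 7, 9, 11]

slope, intercept = simple_linear_regression(ad_spend, revenue)
print(slope, intercept)
```

Real data rarely falls on a perfect line, so the fitted constants describe the best straight-line approximation, not an exact rule.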

Naïve Bayes Technique

- Description: Naïve Bayes is a classification technique that uses simple probability to classify items based on previous classifications. In plain English, the formula would be “the chance that a thing with trait x belongs to class c equals the chance of seeing trait x among members of class c, multiplied by the overall chance of class c, divided by the overall chance of getting trait x.” As a formula, it’s P(c|x) = P(x|c) * P(c) / P(x).

- Importance: High. Naïve Bayes is a very common, simplistic classification technique because it’s effective with large data sets and it can be applied to any instance in which there is a class. Google, for example, might use it to group webpages into groups for certain search engine queries.

- Nature of Data: the nature of data for Naïve Bayes is at least one class and at least two traits in a data set.

- Motive: the motive behind Naïve Bayes is to classify observations based on previous data. It’s thus considered part of predictive analysis.
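The formula P(c|x) = P(x|c) * P(c) / P(x) can be worked through with counts from past classifications. The email counts below are hypothetical, and the sketch uses a single trait. A full Naïve Bayes classifier multiplies the likelihoods of several traits, treating them as independent.

```python
# Hypothetical history: 100 past emails that have already been classified.
total_emails = 100
spam_total = 40        # emails in class c ("spam")
spam_with_offer = 30   # spam emails showing trait x (contains the word "offer")
other_with_offer = 6   # non-spam emails showing trait x

p_c = spam_total / total_emails                            # P(c): overall chance of the class
p_x_given_c = spam_with_offer / spam_total                 # P(x|c): chance of the trait within the class
p_x = (spam_with_offer + other_with_offer) / total_emails  # P(x): overall chance of the trait

# Bayes' rule: chance that an email showing trait x belongs to class c.
p_c_given_x = p_x_given_c * p_c / p_x

print(round(p_c_given_x, 3))
```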

Cohorts Technique

- Description: cohorts technique is a type of clustering method used in behavioral sciences to separate users by common traits. As with clustering, it can be done intuitively or mathematically, the latter of which would simply be k-means.

- Importance: Very high. Though it resembles k-means, the cohort technique is more of a high-level counterpart. In fact, most people are familiar with it as a part of Google Analytics. It’s most common in marketing departments in corporations, rather than in research.

- Nature of Data: the nature of cohort data is data sets in which users are the observation and other fields are used as defining traits for each cohort.

- Motive: the motive for cohort analysis techniques is to group similar users and analyze how you retain them and how they churn.
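A small sketch of the intuitive version of this technique: users are grouped into cohorts by a shared trait and retention is compared across cohorts. The field names (`signup_month`, `active_next_month`) and the records themselves are hypothetical.

```python
from collections import defaultdict

# Hypothetical user records: the user is the observation, the other fields define the cohort.
users = [
    {"user": "a", "signup_month": "2020-01", "active_next_month": True},
    {"user": "b", "signup_month": "2020-01", "active_next_month": False},
    {"user": "c", "signup_month": "2020-01", "active_next_month": True},
    {"user": "d", "signup_month": "2020-01", "active_next_month": True},
    {"user": "e", "signup_month": "2020-02", "active_next_month": True},
    {"user": "f", "signup_month": "2020-02", "active_next_month": False},
]

def retention_by_cohort(records):
    """Return the share of each signup cohort that was still active the following month."""
    totals = defaultdict(int)
    retained = defaultdict(int)
    for record in records:
        cohort = record["signup_month"]
        totals[cohort] += 1
        if record["active_next_month"]:
            retained[cohort] += 1
    return {cohort: retained[cohort] / totals[cohort] for cohort in totals}

retention = retention_by_cohort(users)
print(retention)
```

Churn for each cohort is simply one minus its retention rate.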

Factor Technique

- Description: the factor analysis technique is a way of grouping many traits into a single factor to expedite analysis. For example, factors can be used as traits for Naïve Bayes classifications instead of more general fields.

- Importance: High. While not commonly employed in corporations, factor analysis is hugely valuable. Good data analysts use it to simplify their projects and communicate them more clearly.

- Nature of Data: the nature of data useful in factor analysis techniques is data sets with a large number of fields on its observations.

- Motive: the motive for using factor analysis techniques is to reduce the number of fields in order to more quickly analyze and communicate findings.

Linear Discriminants Technique

- Description: linear discriminant analysis techniques are similar to regressions in that they use one or more independent variables to determine a dependent variable; however, the linear discriminant technique falls under a classifier method since it uses traits as independent variables and class as a dependent variable. In this way, it becomes a classifying method AND a predictive method.

- Importance: High. Though the analyst world speaks of and uses linear discriminants less commonly, it’s a highly valuable technique to keep in mind as you progress in data analysis.

- Nature of Data: the nature of data useful for the linear discriminant technique is data sets with many fields.

- Motive: the motive for using linear discriminants is to classify observations that would be otherwise too complex for simple techniques like Naïve Bayes.

Exponential Smoothing Technique

- Description: exponential smoothing is a technique falling under the forecasting method that uses a smoothing factor on prior data in order to predict future values. It can be linear or adjusted for seasonality. The basic principle behind exponential smoothing is to use a percent weight (a value between 0 and 1 called alpha) on more recent values in a series and a smaller percent weight on less recent values. The formula is forecast = alpha * current period value + (1 - alpha) * previous period’s smoothed value.

- Importance: High. Most analysts still use the moving average technique (covered next) for forecasting because it’s easy to understand, even though it is less efficient than exponential smoothing. However, good analysts will have exponential smoothing techniques in their pocket to increase the value of their forecasts.

- Nature of Data: the nature of data useful for exponential smoothing is time series data. Time series data has time as part of its fields.

- Motive: the motive for exponential smoothing is to forecast future values with a smoothing variable.
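A minimal sketch of simple (non-seasonal) exponential smoothing. It assumes the series is seeded with its first observation, which is one common convention among several, and the `monthly_sales` figures are hypothetical:

```python
def exponential_smoothing(values, alpha):
    """Return the smoothed series; the last entry serves as the forecast for the next period."""
    if not 0 < alpha <= 1:
        raise ValueError("alpha must be greater than 0 and at most 1")
    smoothed = [values[0]]  # seed with the first observation
    for value in values[1:]:
        # Weight the newest value by alpha and the running smoothed value by (1 - alpha).
        smoothed.append(alpha * value + (1 - alpha) * smoothed[-1])
    return smoothed

# Hypothetical time series, oldest period first.
monthly_sales = [100, 110, 105, 120]
smoothed_sales = exponential_smoothing(monthly_sales, alpha=0.5)
next_month_forecast = smoothed_sales[-1]

print(smoothed_sales, next_month_forecast)
```

A higher alpha makes the forecast react faster to recent values, while a lower alpha gives a steadier line that leans more on history.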

Moving Average Technique

- Description: the moving average technique falls under the forecasting method and uses an average of recent values to predict future ones. For example, to predict rainfall in April, you would take the average of rainfall from January to March. It’s simple, yet highly effective.

- Importance: Very high. While I’m personally not a huge fan of moving averages due to their simplistic nature and lack of consideration for seasonality, they’re the most common forecasting technique and therefore very important.

- Nature of Data: the nature of data useful for moving averages is time series data.

- Motive: the motive for moving averages is to predict future values in a simple, easy-to-communicate way.
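The rainfall example can be sketched as follows. The monthly figures are hypothetical, and the function simply averages the most recent `window` values:

```python
def moving_average_forecast(values, window):
    """Forecast the next period as the average of the most recent `window` values."""
    if window < 1 or window > len(values):
        raise ValueError("window must be between 1 and the number of values")
    recent = values[-window:]
    return sum(recent) / window

# Hypothetical rainfall in millimetres for January, February, and March.
rainfall_mm = [60, 45, 75]

april_forecast = moving_average_forecast(rainfall_mm, window=3)
print(april_forecast)
```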

Neural Networks Technique

- Description: neural networks are a highly complex artificial intelligence technique that replicates a human’s neural analysis through a series of hyper-rapid computations and comparisons that evolve in real time. This technique is so complex that an analyst must use computer programs to perform it.

- Importance: Medium. While the potential for neural networks is theoretically unlimited, it’s still little understood and therefore uncommon. You do not need to know it by any means in order to be a data analyst.

- Nature of Data: the nature of data useful for neural networks is data sets of astronomical size, meaning with 100s of 1000s of fields and the same number of rows at a minimum.

- Motive: the motive for neural networks is to understand wildly complex phenomena and data to thereafter act on them.

Decision Tree Technique

- Description: the decision tree technique uses artificial intelligence algorithms to rapidly calculate possible decision pathways and their outcomes on a real-time basis. It’s so complex that computer programs are needed to perform it.

- Importance: Medium. As with neural networks, decision trees with AI are too little understood and are therefore uncommon in corporate and research settings alike.

- Nature of Data: the nature of data useful for the decision tree technique is hierarchical data sets that show multiple optional fields for each preceding field.

- Motive: the motive for decision tree techniques is to compute the optimal choices to make in order to achieve a desired result.

Evolutionary Programming Technique

- Description: the evolutionary programming technique uses a series of neural networks, sees how well each one fits a desired outcome, and selects only the best to test and retest. It’s called evolutionary because it resembles the process of natural selection by weeding out weaker options.

- Importance: Medium. As with the other AI techniques, evolutionary programming just isn’t well-understood enough to be usable in many cases. Its complexity also makes it hard to explain in corporate settings and difficult to defend in research settings.

- Nature of Data: the nature of data in evolutionary programming is data sets of neural networks, or data sets of data sets.

- Motive: the motive for using evolutionary programming is similar to decision trees: understanding the best possible option from complex data.

Fuzzy Logic Technique

- Description: fuzzy logic is a type of computing based on “approximate truths” rather than simple truths such as “true” and “false.” It is essentially two tiers of classification. For example, to say whether “Apples are good,” you need to first classify that “Good is x, y, z.” Only then can you say apples are good. Another way to see it is as helping a computer see truth like humans do: “definitely true, probably true, maybe true, probably false, definitely false.”

- Importance: Medium. Like the other AI techniques, fuzzy logic is uncommon in both research and corporate settings, which means it’s less important in today’s world.

- Nature of Data: the nature of fuzzy logic data is huge data tables that include other huge data tables with a hierarchy including multiple subfields for each preceding field.

- Motive: the motive for using fuzzy logic to replicate human truth valuations in a computer is to model human decisions based on past data. The obvious possible application is marketing.
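One way to picture "approximate truths" is a membership function that returns a degree of truth between 0 and 1 instead of a plain true or false. The triangular shape, the temperature thresholds, and the cut-offs for each label below are all hypothetical choices made for illustration:

```python
def triangular_membership(value, low, peak, high):
    """Degree (0.0 to 1.0) to which `value` belongs to a fuzzy set that is fully true at `peak`."""
    if value <= low or value >= high:
        return 0.0
    if value <= peak:
        return (value - low) / (peak - low)
    return (high - value) / (high - peak)

def describe(degree):
    """Translate a degree of truth into the human-style labels from the text."""
    if degree >= 0.9:
        return "definitely true"
    if degree >= 0.6:
        return "probably true"
    if degree >= 0.4:
        return "maybe true"
    if degree > 0.1:
        return "probably false"
    return "definitely false"

# Hypothetical fuzzy set "the room is warm", fully true at 22 degrees Celsius.
for temperature in (22, 19, 5):
    degree = triangular_membership(temperature, low=10, peak=22, high=34)
    print(temperature, degree, describe(degree))
```

The first tier defines what "warm" means (the membership function), and the second tier decides how true the statement is for a given reading.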

Text Analysis Technique

- Description: text analysis techniques fall under the qualitative data analysis type and use text to extract insights.

- Importance: Medium. Text analysis techniques, like all techniques of the qualitative analysis type, are most valuable for researchers.

- Nature of Data: the nature of data useful in text analysis is words.

- Motive: the motive for text analysis is to trace themes in a text across sets of very long documents, such as books.

Coding Technique

- Description: the coding technique is used in textual analysis to turn ideas into uniform phrases and analyze the number of times and the ways in which those ideas appear. For this reason, some consider it a quantitative technique as well. You can learn more about coding and the other qualitative techniques here.

- Importance: Very high. If you’re a researcher working in social sciences, coding is THE analysis technique, and for good reason. It’s a great way to add rigor to analysis. That said, it’s less common in corporate settings.

- Nature of Data: the nature of data useful for coding is long text documents.

- Motive: the motive for coding is to make tracing ideas on paper more than an exercise of the mind by quantifying it and understanding it through descriptive methods.

Idea Pattern Technique

- Description: the idea pattern analysis technique fits into coding as the second step of the process. Once themes and ideas are coded, simple descriptive analysis tests may be run. Some people even cluster the ideas!

- Importance: Very high. If you’re a researcher, idea pattern analysis is as important as the coding itself.

- Nature of Data: the nature of data useful for idea pattern analysis is already coded themes.

- Motive: the motive for the idea pattern technique is to trace ideas in otherwise unmanageably-large documents.
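The two steps, coding and then counting the coded themes, can be sketched roughly as follows. In practice a researcher assigns codes by reading and judgment, so the keyword matching here is a deliberate simplification, and the codebook and excerpts are hypothetical:

```python
from collections import Counter

# Hypothetical codebook: each theme (code) is tied to keywords that signal it.
codebook = {
    "price": {"expensive", "cheap", "cost"},
    "service": {"support", "helpful", "rude"},
}

def code_excerpt(excerpt, codebook):
    """Step 1 (coding): return the themes whose keywords appear in the excerpt."""
    words = set(excerpt.lower().replace(".", "").replace(",", "").split())
    return {theme for theme, keywords in codebook.items() if words & keywords}

excerpts = [
    "The plan is too expensive.",
    "Support was helpful, but the cost is high.",
    "Staff were rude.",
]

# Step 2 (idea pattern): count how often each coded theme appears across excerpts.
theme_counts = Counter(
    theme for excerpt in excerpts for theme in code_excerpt(excerpt, codebook)
)

print(theme_counts)
```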

Word Frequency Technique

- Description: word frequency is a qualitative technique that stands in opposition to coding and uses an inductive approach to locate specific words in a document in order to understand its relevance. Word frequency is essentially the descriptive analysis of qualitative data because it uses stats like mean, median, and mode to gather insights.

- Importance: High. As with the other qualitative approaches, word frequency is very important in social science research, but less so in corporate settings.

- Nature of Data: the nature of data useful for word frequency is long, informative documents.

- Motive: the motive for word frequency is to locate target words to determine the relevance of a document in question.
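A short sketch of counting target words with Python’s `collections.Counter`. The sample document and the list of target words are hypothetical:

```python
import re
from collections import Counter

def word_frequency(text, target_words):
    """Count how many times each target word appears in the text, ignoring case."""
    words = re.findall(r"[a-z']+", text.lower())
    counts = Counter(words)
    return {word: counts[word] for word in target_words}

# Hypothetical document and target words.
document = (
    "Data analysis turns raw data into insight. "
    "Good analysis starts with clean data."
)
frequencies = word_frequency(document, ["data", "analysis", "model"])

print(frequencies)
```

A target word that never appears gets a count of zero, which is itself a signal about the document’s relevance.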

Types of data analysis in research

Types of data analysis in research methodology include every item discussed in this article. As a list, they are:

- Quantitative

- Qualitative

- Mathematical

- Machine Learning and AI

- Descriptive

- Prescriptive

- Classification

- Forecasting

- Optimization

- Grounded theory

- Artificial Neural Networks

- Decision Trees

- Evolutionary Programming

- Fuzzy Logic

- Text analysis

- Idea Pattern Analysis

- Word Frequency Analysis

- Naïve Bayes

- Exponential smoothing

- Moving average

- Linear discriminant

Types of data analysis in qualitative research

As a list, the types of data analysis in qualitative research are the following methods:

Types of data analysis in quantitative research

As a list, the types of data analysis in quantitative research are:

Data analysis methods

As a list, data analysis methods are:

- Content (qualitative)

- Narrative (qualitative)

- Discourse (qualitative)

- Framework (qualitative)

- Grounded theory (qualitative)

Quantitative data analysis methods

As a list, quantitative data analysis methods are:

Tabular View of Data Analysis Types, Methods, and Techniques

About the author.

Noah is the founder & Editor-in-Chief at AnalystAnswers. He is a transatlantic professional and entrepreneur with 5+ years of corporate finance and data analytics experience, as well as 3+ years in consumer financial products and business software. He started AnalystAnswers to provide aspiring professionals with accessible explanations of otherwise dense finance and data concepts. Noah believes everyone can benefit from an analytical mindset in a growing digital world. When he's not busy at work, Noah likes to explore new European cities, exercise, and spend time with friends and family.
