History Of Computers With Timeline [2023 Update]

It’s important to know the history of computers in order to have a good understanding of the field. Computers are one of the most important inventions in human history. Given how fast technology is evolving, you might not expect the history of computers to go back thousands of years. However, that’s exactly the case. But before we go back that far, let’s first understand what a computer actually is.

The First Computers In History

What Is A Computer?

A computer is simply a machine that follows a set of instructions in order to execute sequences of logical or arithmetic functions. However, when we think of modern computers, we don’t see them as just calculators performing functions. Yet, that’s exactly what they are at their core.

Every time you make a purchase on Amazon or post a picture on Instagram, your computer is executing instructions and processing a massive amount of binary data. However, when we consider the definition of a computer, we realize that the history of computers goes far back.

When Was The First Computer Invented?

The history of computers goes back thousands of years, with the first one being the abacus. In fact, the earliest abacus, referred to as the Sumerian abacus, dates back to roughly 2700 B.C. from the Mesopotamia region. However, Charles Babbage, the English mathematician and inventor, is known as the “Father of Computers.” He designed a steam-powered computer known as the Analytical Engine in 1837, which kickstarted computer history.

Digital Vs. Analog Computers

The very first computer, the abacus, is a digital computer because it deals in digits. Today’s computers are also digital because they compute everything using binary: 0’s and 1’s. However, most of the computers between the time of the abacus and modern transistor-based computers were in fact analog computers.

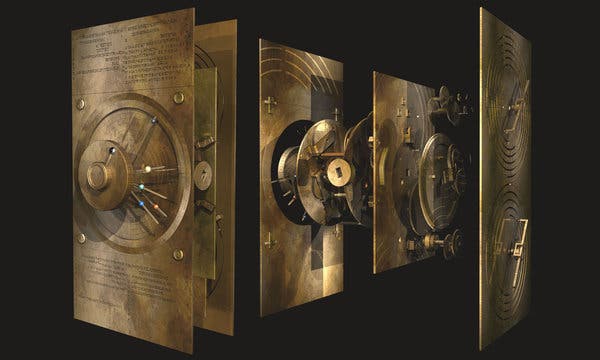

Analog computers, rather than calculating with single digits, deal with more complex mathematics and functions. Rather than 1’s and 0’s, analog computers represent values with continuously varying quantities. The earliest analog computer, the Antikythera mechanism, is over 2000 years old. These ancient computers paved the way for modern transistor-based computers.

Brief History of Computers

The history of computers goes back as far as roughly 2700 B.C. with the abacus. However, the modern history of computers begins with the Analytical Engine, a steam-powered computer designed in 1837 by English mathematician and “Father of Computers,” Charles Babbage. Yet, the invention of the transistor in 1947, the integrated circuit in 1958, and the microprocessor in 1971 are what made computers much smaller and faster.

In fact, the first personal computer was invented in 1971, the same year as the microprocessor. Then, the first laptop, the Osborne 1, was created a decade later in 1981. Apple and IBM joined the personal computer industry shortly thereafter, popularizing the home PC. Then, in 1989, the World Wide Web was created, which would eventually serve to connect nearly the whole world.

The 1990s was a booming decade for computer history. IBM produced the first smartphone in 1992 and the first smartwatch was released in 1998. Also, the first-ever quantum computer in history was up and functioning in 1998, if only for a few nanoseconds.

Turn of the Century Computers

The 2000s are the years of social media, with the rise and fall of MySpace at the forefront. Facebook took off shortly after and would become one of the most popular apps on the iPhone, which was first presented by the legend, Steve Jobs, in 2007. It was a pocket-sized computer that was capable of greater computation than the computer that brought mankind to the Moon. The iPad would be released three years later in 2010.

The 2010s seem to have been the decade of Artificial Intelligence and Quantum Computing. Tesla AI-powered self-driving vehicles have made incredible progress toward full autonomy. An AI robot named Sophia was created in 2016 and even gained citizenship in Saudi Arabia in 2017. The world’s first reprogrammable quantum computer was created in 2016, bringing us closer to quantum supremacy.

Timeline Of Computer History

The First Digital Computer

2700 B.C: The first digital computer, the abacus, is invented and used around the area of Mesopotamia. Later iterations of the abacus appear in Egypt, Greece, and China, where they’re continually used for hundreds of years. The first abaci were likely used for addition and subtraction, which must have been revolutionary for the time. However, the following iterations allowed for more complex calculations.

The First Analog Computer

200 B.C: The first analog computer, the Antikythera mechanism, is created. The mechanism was found in a shipwreck off the coast of the Greek island of Antikythera, from which the computer received its name. The find baffled many scientists because a device this advanced wasn’t thought to exist so long ago. This mechanical analog computer used a system of gears to model the positions of the Sun and Moon and to predict eclipses.

Binary Number System

1703: Gottfried Wilhelm Leibniz developed the binary number system, which is at the heart of modern computing. The binary number system represents any number using only the digits 0 and 1, and agreed-upon encodings map those numbers to letters and other characters. Everything we see on screen and interact with on our computers is converted into binary before the computer can process it. The magic of present-day computers is that they process binary extremely quickly.
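To make the idea concrete, here is a minimal Python sketch of converting between decimal numbers, binary digits, and text. The function names (to_binary, from_binary, text_to_bits) are made up for this illustration, and the text example assumes UTF-8 encoding, which is only one of many possible character encodings.

```python
def to_binary(n: int) -> str:
    """Convert a non-negative integer to binary digits by repeated division by 2."""
    if n < 0:
        raise ValueError("n must be non-negative")
    if n == 0:
        return "0"
    digits = []
    while n > 0:
        digits.append(str(n % 2))  # the remainder is the next least-significant bit
        n //= 2
    return "".join(reversed(digits))


def from_binary(bits: str) -> int:
    """Convert a string of 0s and 1s back into an integer."""
    value = 0
    for bit in bits:
        value = value * 2 + int(bit)  # shift one place left, then add the new bit
    return value


def text_to_bits(text: str) -> list[str]:
    """Encode text as UTF-8 (an assumption for this sketch) and show each byte as 8 bits."""
    return [format(byte, "08b") for byte in text.encode("utf-8")]


print(to_binary(13))        # 1101
print(from_binary("1101"))  # 13
print(text_to_bits("Hi"))   # ['01001000', '01101001']
```

Each binary place is worth twice the one to its right, so 1101 means 8 + 4 + 0 + 1 = 13.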

First Programmable Loom

1801: Joseph Jacquard creates a punch-card programmable loom which greatly simplified the weaving process. This allowed those with fewer skills to weave more complicated patterns. However, many didn’t like the idea of simplifying and automating the process as it would displace weaving jobs at the time. Yet, technology persisted and the textile industry would eventually change for the better because of it.

First Steam-Driven Computer

1837: Charles Babbage designed the groundbreaking Analytical Engine. The Analytical Engine was the first major step toward modern computers. Although it was never actually built, its design embodied the major characteristics of modern computers. This included memory, a central processing unit, and the ability for input and output. Charles Babbage is commonly referred to as the “Father of Computers” for his work.

First Computer Algorithm

1843: Ada Lovelace, the daughter of Lord Byron, worked alongside Charles Babbage on the Analytical Engine. In her published notes on the machine, she wrote what is widely regarded as the first computer algorithm. She carefully considered what computers were capable of when developing her algorithm. The result was a method for computing Bernoulli numbers, a sequence that appears throughout mathematics.
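For a sense of what computing Bernoulli numbers involves, here is a short Python sketch. It is not a transcription of Lovelace’s program; it uses a standard modern recurrence and the convention that B_1 = -1/2, and the function name bernoulli_numbers is made up for this example.

```python
from fractions import Fraction
from math import comb


def bernoulli_numbers(n: int) -> list[Fraction]:
    """Return [B_0, ..., B_n] as exact fractions, using the convention B_1 = -1/2.

    Uses the recurrence: the sum over k = 0..m of C(m + 1, k) * B_k equals 0 for m >= 1.
    """
    numbers = [Fraction(1)]  # B_0 = 1
    for m in range(1, n + 1):
        # Add up the terms that involve the already-known B_0 .. B_(m-1) ...
        total = sum(comb(m + 1, k) * numbers[k] for k in range(m))
        # ... then solve the recurrence for B_m.
        numbers.append(-total / (m + 1))
    return numbers


for index, value in enumerate(bernoulli_numbers(6)):
    print(f"B_{index} = {value}")
```

Exact fractions are used instead of floating-point numbers because Bernoulli numbers are rational and rounding errors would build up quickly.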

First U.S. Census Calculator

1890: Herman Hollerith created a tabulating machine to help calculate the U.S. census. The previous decade’s census took eight years to calculate but with the help of Hollerith’s tabulating machine, it took only six years. With the success of his tabulator, Hollerith then began his own company, the Hollerith Electrical Tabulating System. He applied this same technology to the areas of accounting and inventory.

The Turing Machine

1936: Alan Turing described the Turing Machine and pushed the limits of what a computer could do at the time. A Turing Machine consists of a tape divided into squares, each of which can contain a single symbol, often a binary digit, or nothing at all. It also consists of a head that can read the symbol on each square and change it, following a fixed table of rules. This might not sound like much, but computers to this day emulate this functionality of reading simple binary input and computing a logical output. This relatively simple machine enables the computation of any algorithm.

Turing’s machine set the standard for computers: a system is regarded as “Turing complete” if it can simulate a Turing machine. Today’s computers are Turing complete because they provide the same functionality as Turing machines, only with much greater processing ability.
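The tape-and-rules idea can be sketched in a few lines of Python. This is a toy simulator written for illustration, not a formal definition: the names run_turing_machine, BLANK, and FLIP_BITS are made up, and the max_steps limit is a practical safeguard that a real Turing machine does not have.

```python
from collections import defaultdict

BLANK = "_"  # symbol used here for an empty square


def run_turing_machine(rules, tape, state="start", halt_state="halt", max_steps=10_000):
    """Run a one-tape Turing machine and return the final tape contents.

    rules maps (state, symbol) -> (symbol_to_write, move, next_state),
    where move is "L" or "R".
    """
    cells = defaultdict(lambda: BLANK, enumerate(tape))  # unvisited squares are blank
    head = 0
    steps = 0
    while state != halt_state:
        if steps >= max_steps:
            raise RuntimeError("machine did not halt within max_steps")
        symbol = cells[head]                         # read the square under the head
        write, move, state = rules[(state, symbol)]  # look up what to do
        cells[head] = write                          # change the square
        head += 1 if move == "R" else -1             # move one square right or left
        steps += 1
    if not cells:
        return ""
    squares = range(min(cells), max(cells) + 1)
    return "".join(cells[i] for i in squares).strip(BLANK)


# Example rule table: flip every bit, then halt at the first blank square.
FLIP_BITS = {
    ("start", "0"): ("1", "R", "start"),
    ("start", "1"): ("0", "R", "start"),
    ("start", BLANK): (BLANK, "R", "halt"),
}

print(run_turing_machine(FLIP_BITS, "1011"))  # 0100
```

Swapping in a different rule table changes what the machine computes without touching the simulator, which is the same separation between program and hardware that modern computers rely on.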

The Complex Number Calculator

1940: George Stibitz created the Complex Number Calculator for Bell Labs. It consisted of relays that could recognize the difference between ‘0’ and ‘1’ and therefore, could use binary as the base number system. The final version of the Complex Number Calculator used more than 400 relays and took about two years to create.

First Automatic Computer

1941: Konrad Zuse, a German computer scientist, invented the Z3 computer. Zuse’s Z3 was the first programmable fully automatic computer in history. It was much larger than the Complex Number Calculator and contained more than 2,500 relays. Since the Z3 computer didn’t demonstrate any advantage to the Germans during World War II, the government didn’t provide any funding for it and it was eventually destroyed in the war.

First Electric Digital Computer

1942: Professor John Vincent Atanasoff invented the Atanasoff-Berry Computer (ABC). The ABC was the first automatic electric digital computer in history. It contained over 300 vacuum tubes and solved linear equations, but it was not programmable or Turing complete. However, the Atanasoff-Berry Computer will forever hold a place in computer history.

First Programmable Electronic Digital Computer

1944: British engineer Tommy Flowers and assistants completed the code-breaking Colossus, which assisted in decrypting German messages during World War II. It’s held as the first programmable electronic digital computer in history. The Colossus contained more than 1,600 vacuum tubes (also called thermionic valves) in the prototype and 2,400 in the second version, the Mark 2 Colossus.

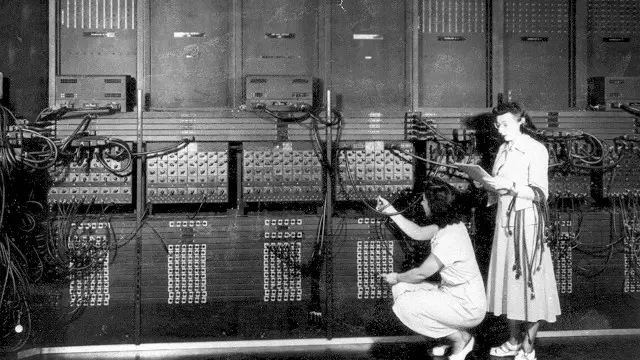

First General-Purpose Digital Computer

1945: ENIAC (Electronic Numerical Integrator and Computer) is completed by professors John Mauchly and J. Presper Eckert. ENIAC was absolutely massive, consisting of more than 17,000 vacuum tubes, 70,000 resistors, and 10,000 capacitors, filling a 30′ x 50′ room and weighing around 60,000 pounds. It was the first general-purpose digital computer in history and was an extremely capable computer for its time. It’s said that in the first decade that ENIAC was in operation, it completed more calculations than had been performed in all of history previously.

First Computer Transistor

1947: John Bardeen, Walter Brattain, and William Shockley of Bell Labs invented the first transistor and drastically changed the course of computing history. The transistor replaced the common vacuum tube, which allowed computers to be much more efficient while greatly reducing their size and energy requirements.

First General-Purpose Commercial Computer

1951: Professors John Mauchly and J. Presper Eckert built UNIVAC (Universal Automatic Computer), the first general-purpose commercial computer in history. The early UNIVAC models utilized 5,000 vacuum tubes but later models in the series adopted transistors. It was a massive computer weighing around 16,000 pounds. However, the massive size allowed for more than 1,000 computations per second.

First Computer Programming Language

1954: A team at IBM led by John Backus created the first commercially available general-purpose computer programming language, FORTRAN. FORTRAN stands for Formula Translation and is still used today. When the language first appeared, however, there were bugs and inefficiencies which led people to speculate on the commercial usability of FORTRAN. Yet, the bugs were worked out, and many of the programming languages that came after were inspired by FORTRAN.

First Computer Operating System

1956: The first computer operating system in history, GM-NAA I/O, was produced by General Motors and released in 1956. It was created by Robert L. Patrick and allowed for direct input and output, hence the name. It also allowed for batch processing: the ability to execute a new program automatically after the current one finishes.
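The batch processing idea can be sketched as a simple queue. This Python example is only a modern illustration of the concept, not a description of how GM-NAA I/O was implemented; run_batch and the sample jobs are made-up names.

```python
from collections import deque


def run_batch(jobs):
    """Run each (name, job) pair in submission order.

    The next job starts automatically as soon as the previous one returns,
    so no operator has to load programs by hand between runs.
    """
    queue = deque(jobs)
    results = []
    while queue:
        name, job = queue.popleft()  # take the oldest waiting job
        results.append((name, job()))
    return results


batch = [
    ("sum", lambda: 1 + 2),
    ("square", lambda: 4 ** 2),
]
print(run_batch(batch))  # [('sum', 3), ('square', 16)]
```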

First Integrated Circuit

1958: Jack Kilby and Robert Noyce create the first integrated circuit, commonly known as a microchip. An integrated circuit consists of electronic circuits mounted onto a semiconductor. The most common semiconductor medium is silicon, which is where the name ‘Silicon Valley’ comes from. If not for the integrated circuit, computers would still be the size of a refrigerator, rather than the size of a credit card.

First Supercomputer

1964: History’s first supercomputer, known as the CDC 6600, was developed by Control Data Corp. It consisted of 400,000 transistors, 100 miles of wiring, and used Freon for internal cooling. Thus, the CDC 6600 was able to reach a processing speed of up to 3 million floating-point operations per second (3 megaFLOPS). Amazingly, this supercomputer was ten times faster than the fastest computer at the time and cost a whopping $8 million.

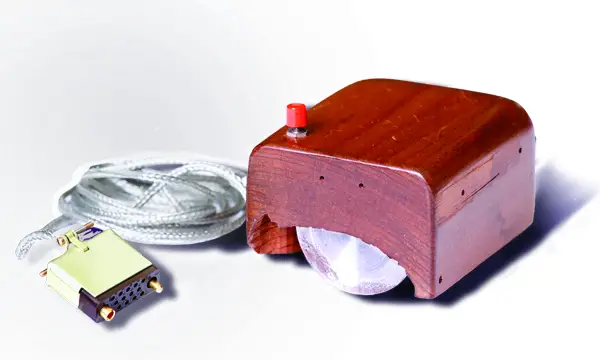

First Computer Mouse

1964: Douglas Engelbart invented the first computer mouse in history but it wouldn’t accompany the first Apple Macintosh until 1984. The computer mouse allowed for additional control of the computer in conjunction with the keyboard. These two input devices have been the primary source of user input ever since. However, voice commands from present-day smart devices are increasingly becoming the norm.

First Wide Area Computer Network

1969: DARPA created the first Wide Area Network in the history of computers, called ARPAnet, which was a precursor to the internet. It allowed computers at different sites to connect and exchange data in nearly real time. The term “internet” wouldn’t come around until 1973, when computers in Norway and England connected to ARPAnet. Although the internet has continued to advance through the decades, many of the same protocols from ARPAnet are still standards today.

First Personal Computer

1971: The first personal computer in history, the Kenbak-1, is created by John Blankenbaker and sold for only $750. However, only around 40 of these computers were ever sold. As small as it was, it was able to execute hundreds of calculations in a single second. Blankenbaker had the idea for the personal computer for more than two decades before completing his first one.

First Computer Microprocessor

1971: Intel releases the first microprocessor in the history of computers, the Intel 4004. This tiny microprocessor had the same computing power as the ENIAC computer, which was the size of an entire room. Even by today’s standards, the Intel 4004 is a small microprocessor, produced on 2-inch wafers as opposed to today’s 12-inch wafers. That said, the initial model had only 2,300 transistors, while it’s not uncommon for today’s microprocessors to have billions of transistors.

First Apple Computer

1976: Apple takes the stage and releases its first computer: the Apple-1. The Apple-1 was different from other computers at the time. It came fully assembled and on a single motherboard. It sold for nearly $700 and had only 4 KB of memory, which is almost laughable compared to today’s standards. However, that was plenty of memory for the applications at the time.

First IBM Personal Computer

1981: IBM launches its first personal computer, the IBM Model-5150. It only took a year to develop and cost $1,600. However, that was a steep drop from earlier IBM computers that sold for several million dollars. The IBM Model-5150 had only 16 KB of RAM when it was first released, but it could eventually be expanded to a maximum of 640 KB.

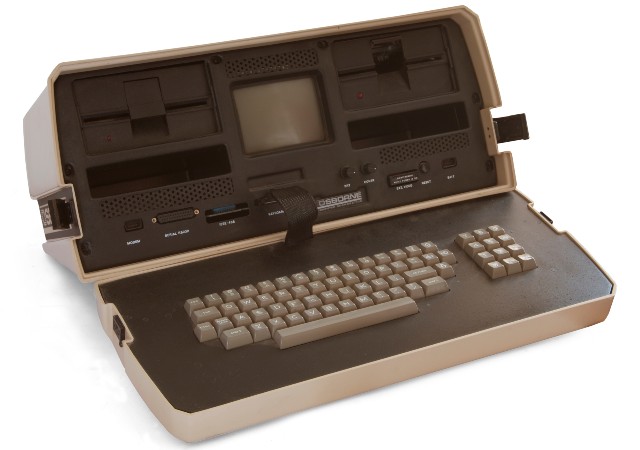

First Laptop Computer

1981: The first laptop in the history of computers, the Osborne 1, was released by the Osborne Computer Corporation. It had an incredibly small 5-inch display screen, a bulky fold-out keyboard, 64 KB of main memory, and weighed 24 pounds. Surprisingly, given its size and weight, the Osborne 1 was very popular, selling more than 125,000 units in 1982 alone. The going rate for an Osborne 1 was $1,795.

First Windows Operating System

1985: Microsoft released its first version of the Windows operating system, Windows 1.0. What made Windows 1.0 remarkable was its reliance on the computer mouse, which wasn’t standard yet. It even included a game, Reversi, to help users become accustomed to the new input device. Love it or hate it, the Windows 1.0 operating system and its subsequent versions have been commonplace among computers ever since its creation. The development of the original Windows OS was led by none other than Bill Gates himself.

World Wide Web Is Created

1989: The World Wide Web is created by Sir Tim Berners-Lee of CERN. When it was first created, it wasn’t intended to grow into a massive platform that would connect the average person. Rather, it was originally just intended to easily share information between scientists and universities. The first website in the history of computers was actually just a guide to using the World Wide Web.

First Flash-Based Solid State Drive

1991: The first flash-based solid-state drive was created by SanDisk (at the time it was called SunDisk). These drives presented an alternative to hard drives and would prove to be very useful in computers, cell phones, and similar devices. This first flash-based SSD had 20 MB of storage and sold for approximately $1,000.

First Smartphone Is Created

1992: IBM created the first-ever smartphone in history, the IBM Simon, which was released two years later in 1994. It was a far cry from the smartphones we’re used to today. However, at the time, IBM Simon was a game-changer. It sold for $1,100 when it was first released and even had a touchscreen and several applications including mail, a calendar, a to-do list, and a few more.

First Platform Independent Language

1995: Sun Microsystems releases the first iteration of the Java programming language. Java popularized platform independence in programming, along with the phrase: “Write once, run anywhere.” Unlike programs in most other languages at the time, a compiled Java program could run on any device with a Java Virtual Machine (JVM).

First Smartwatch Is Released

1998: The first-ever smartwatch, the Ruputer, was released by the watch company Seiko. If you look at the original Ruputer, you’ll see that it really doesn’t look much different from present-day smartwatches, which mainly add a better display and minor styling changes. As it didn’t have a touchscreen, a small joystick assisted with navigating the various features of the watch.

First Quantum Computer

1998: After decades of theory, the first quantum computer is created by three computer scientists. It had only 2 qubits, as opposed to the 16-qubit reprogrammable quantum computers of recent years. This first quantum computer didn’t solve any significant problem, as it wasn’t incredibly efficient. In fact, it ran for only a few nanoseconds. However, it was a proof of concept that paved the way for today’s quantum computers.

First USB Flash Drive

2000: The first USB flash drive in computer history, the ThumbDrive, is released by Trek, a company out of Singapore. However, there were other flash drives that hit the market almost immediately after, such as IBM’s DiskOnKey, an 8 MB flash drive. This led to some speculation as to who was actually first. However, as evidenced by the patent application back in 1999, and the fact that Trek’s ThumbDrive made it to market first, the debate was soon settled.

DARPA Centibots Project

2002: DARPA launched the Centibots project in which they developed 100 identical robots that could work together and communicate with each other. The Centibots could survey an area and build a map of it in real time. Additionally, these robots could identify objects including their companion robots and people, and distinguish between the two. The maps that they make are incredibly accurate. In total, the Centibots project cost around $2.2 million to complete.

MySpace Comes And Goes

2004: MySpace gained over 1 million users within the first month of its official launch and 22 million users just a year later. Soon after in 2005, it was purchased by News Corp for $580 million. However, shortly after the sale of MySpace, it was fraught with scandal after scandal. MySpace helped to popularize social media, with Facebook trailing right behind it, passing MySpace in users in 2008. It eventually laid off about 50% of its workforce in 2011.

Arduino Is Released

2005: Italian designer Massimo Banzi released the Arduino, a credit card-sized development board. The Arduino was intended to help design students who didn’t have any previous exposure to programming and electronics but eventually became a beloved tool for tech hobbyists worldwide. To this day, Arduino boards are increasingly a part of electronics education, including self-education.

iPhone Generation-1 Released

2007: Steve Jobs of Apple released the first-ever iPhone, revolutionizing the smartphone industry. The screen was 50% bigger than those of the popular smartphones of the time, such as the beloved Blackberry and Treo. It also had a much longer-lasting battery. Additionally, the iPhone normalized web browsing and video playback on phones, setting a new standard across the industry. The cost was what you could expect from an iPhone, selling at around $600, more than twice as much as its competitors.

Apple’s iPad Is Released

2010: Only three years after the iPhone is released, Steve Jobs announces the first-ever iPad, Apple’s first tablet computer. It came with a 9.7-inch touchscreen and options for 16GB, 32GB, or 64GB of storage. The beauty of the iPad is that it was basically a large iPhone, as it ran on the same iOS and offered the same functionality. The original iPad started at $499 with the 64GB Wi-Fi + 3G version selling for $829.

Nest Thermostat Is Released

2011: The Nest Thermostat, a smart thermostat created by Nest Labs, is released as a growing number of household devices make up the “internet of things.” When the Nest Thermostat was first released, not only did it make thermostats smart, it made them beautiful. For only $250 you could buy a thermostat that decreased your energy bill, improved the aesthetic of your home, and could be controlled by your phone.

First Raspberry Pi Computer

2012: The first Raspberry Pi computer is released, opening up a world of possibilities for creative coders. These small yet capable computers cost around $25-$35 when first released and were as small as a credit card. Raspberry Pis were similar to the Arduino in size but differed greatly in their capability. The Raspberry Pi is several times faster than the Arduino and has over 100,000 times more memory.

Tesla Introduces Autopilot

2014: Tesla’s Elon Musk introduces the first self-driving features in its fleet of automobiles, dubbed Autopilot. The future of automobiles chauffeuring their passengers with no input from a driver is finally within sight. The first version of Autopilot included not only camera systems but also radar and sonar in order to detect everything within the car’s surroundings. It also included a self-park feature and even a summoning feature that calls the vehicle to you. The computers and technology within Tesla vehicles have essentially turned them into the first advanced personal transportation robots in history.

Sophia The Robot Is Created

2016: Sophia, the artificially intelligent humanoid robot, was created by former Disney Imagineer David Hanson. A year after her creation, Sophia gained citizenship in Saudi Arabia, becoming the first robot in history to gain citizenship. Since she was created, Sophia has taken part in many interviews and even debates. She’s quite a wonder to watch!

First Reprogrammable Quantum Computer

2016: Quantum computers have made considerable progress and the first reprogrammable quantum computer is finally complete. It’s made up of five individual atoms that act as qubits. A laser beam controls the state of each qubit, much like flipping a switch. This leap has brought us closer to quantum supremacy.

First Brain-Computer Interface

2019: Elon Musk announces Neuralink’s progress on its brain-machine interface, which would lend humans the same information processing abilities that computers have while linking to Artificial Intelligence. In this announcement, Neuralink revealed that they had already successfully tested their technology on mice and apes.

Tesla Nears Fully Autonomous Vehicles

2020: In July, Elon Musk declared that a Tesla Autopilot update is coming later this year that will bring their vehicles one step closer to complete “level-5” autonomy. Level-5 autonomy would finally allow passengers to reach their destination without any human intervention. The long-awaited software update would likely increase the company’s value massively and Musk’s net worth along with it.

Elon Musk announces Tesla Bot, becomes Time’s Person of the Year

2021: Musk continues to innovate, announcing in August that Tesla is developing a near-life-size humanoid robot. Many are skeptical of the viability of the robot, while others claim this is another of Musk’s inventions that science fiction warned against, similar to the Brain-Computer Interface.

Regardless of any opinions, Musk still had a stellar year. Starship has made progress, Tesla sales are on the rise, and Musk managed to earn the title of Time’s Person of the Year, all while becoming the wealthiest person on the planet, with a net worth exceeding $250 billion.

Facebook changes name to Meta, Zuck announces Metaverse

2021: In October, Mark Zuckerberg made a bold and controversial, yet possibly visionary, announcement that Facebook would change its name to Meta. Additionally, he explained the new immersive Virtual Reality world they’re creating that would be built on top of the existing social network, dubbed the Metaverse. Zuckerberg elaborated that the technology for the immersive experience he envisions is mostly here but mainstream adoption is still 5 to 10 years out. However, when that time comes, your imagination will be your only limitation within the confines of the Metaverse.

IBM’s “Eagle” Quantum Computer Chip (127 Qubits)

2021: IBM continues to lead the charge in quantum computer development and in November, they showcased their new “Eagle” chip. This is currently the most cutting-edge quantum chip in existence, packing 127 qubits, making it the first to reach over 100 qubits. IBM plans to create a new chip more than three times more powerful than the “Eagle” by next year, 2022.

OpenAI Releases DALL-E 2

2022: DALL-E, developed by OpenAI, is capable of generating high-quality images from textual descriptions. It uses a combination of deep learning techniques and a large database of images to generate new and unique images based on textual input. DALL-E 2, launched in April 2022, is the second generation of this model. It is trained on hundreds of millions of images and is almost magical in its production of high-quality images in a matter of seconds.

IBM’s “Osprey” Quantum Computer Chip (433 Qubits)

2022: In only a year, IBM has more than tripled the qubit count of its previous “Eagle” chip. The new “Osprey” quantum chip, announced in November, packs 433 qubits compared with the Eagle’s 127. The IBM Osprey quantum computer chip represents a major advancement in quantum computing technology and is expected to pave the way for even more powerful quantum computers in the future.

ChatGPT Released Upon The World

2022: ChatGPT was launched on November 30 and took the world by storm, amassing over 1 million users in only 5 days! Currently, ChatGPT is powered by GPT-3.5, which is the latest version of the AI software. The development of ChatGPT was a major milestone in the field of natural language processing, as it represented a significant improvement in the ability of machines to understand and generate human language.

The Much Hyped GPT-4 Is Finally Released

2023: ChatGPT and many other AI apps run on GPT. As powerful as GPT-3.5 was, GPT-4 is trained on a much more massive data set and is far more accurate and better at understanding the intentions of the user’s prompts. It’s a giant leap forward from the previous version, causing both excitement and concern from the general public as well as tech figures such as Elon Musk, who wants to slow the advancement of AI.

Tim Statler

Tim Statler is a Computer Science student at Governors State University and the creator of Comp Sci Central. He lives in Crete, IL with his wife, Stefanie, and their cats, Beyoncé and Monte. When he's not studying or writing for Comp Sci Central, he's probably just hanging out or making some delicious food.
